CDN

Introduction

“Use a Content Delivery Network” was one of Steve Souders’ original recommendations for making web sites load faster. It’s advice that remains valid today, and in this chapter of the Web Almanac we’re going to explore how widely Steve’s recommendation has been adopted, how sites are using Content Delivery Networks (CDNs), and some of the features they’re using.

Fundamentally, CDNs reduce latency—the time it takes for packets to travel between two points on a network, say from a visitor’s device to a server—and latency is a key factor in how quickly pages load.

A CDN reduces latency in two ways: first, by serving content from locations that are closer to the user, and second, by terminating the TCP connection closer to the end user.

Historically, CDNs were used to cache, or copy, bytes so that the logical path from the user to the bytes becomes shorter. A file that is requested by many people can be retrieved once from the origin (your server) and then stored on a server closer to the user, thus saving transfer time.

CDNs also help with TCP latency. The latency of TCP determines how long it takes to establish a connection between a browser and a server, how long it takes to secure that connection, and ultimately how quickly content downloads. At best, network packets travel at roughly two-thirds of the speed of light, so how long that round trip takes depends on how far apart the two ends of the conversation are, and what’s in between. Congested networks, overburdened equipment, and the type of network will all add further delays. Using a CDN to move the server end of the connection closer to the visitor reduces this latency penalty, shortening connection times, TLS negotiation times, and improving content download speeds.
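The distance argument can be made concrete with a little arithmetic. The sketch below estimates a best-case round-trip time from distance alone, using the "roughly two-thirds of the speed of light" figure from the text. The two distances are hypothetical values chosen for illustration, and real networks add routing, queuing, and processing delays on top of this lower bound.

```python
# Rough lower bound on round-trip time (RTT) from distance alone.
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum
PROPAGATION_FACTOR = 2 / 3         # "roughly two-thirds", as stated in the text


def min_rtt_ms(distance_km: float) -> float:
    """Best-case RTT in milliseconds for a server distance_km away."""
    one_way_seconds = distance_km / (SPEED_OF_LIGHT_KM_S * PROPAGATION_FACTOR)
    return 2 * one_way_seconds * 1000


# Hypothetical distances: a nearby CDN edge versus a far-away origin.
for label, km in [("edge at 100 km", 100), ("origin at 8,000 km", 8000)]:
    print(f"{label}: at least {min_rtt_ms(km):.1f} ms per round trip")
```

Because connection setup, TLS negotiation, and congestion window growth each cost one or more round trips, this per-round-trip difference is paid several times over before the first byte of content arrives.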

Although CDNs are often thought of as just caches that store and serve static content close to the visitor, they are capable of so much more! CDNs aren’t limited to just helping overcome the latency penalty, and increasingly they offer other features that help improve performance and security.

- Using a CDN to proxy dynamic content (base HTML page, API responses, etc.) can take advantage of both the reduced latency between the browser and the CDN, and the CDN’s own network back to the origin.

- Some CDNs offer transformations that optimize pages so they download and render more quickly, or optimize images so they’re the appropriate size (both dimensions and file size) for the device on which they’re going to be viewed.

- From a security perspective, malicious traffic and bots can be filtered out by a CDN before the requests even reach the origin, and their wide customer base means CDNs can often see and react to new threats sooner.

- The rise of edge computing allows sites to run their own code close to their visitors, both improving performance and reducing the load on the origin.

Finally, CDNs also help sites to adopt new technologies without requiring changes at the origin. For example, HTTP/2, TLS 1.3, and IPv6 can be enabled from the edge to the browser, even if the origin servers don’t support them yet.

Caveats and disclaimers

As with any observational study, there are limits to the scope and impact that can be measured. The statistics gathered on CDN usage for the Web Almanac do not imply the performance or effectiveness of a specific CDN vendor.

There are many limits to the testing methodology used for the Web Almanac. These include:

- Simulated network latency: The Web Almanac uses a dedicated network connection that synthetically shapes traffic.

- Single geographic location: Tests are run from a single datacenter and cannot test the geographic distribution of many CDN vendors.

- Cache effectiveness: Each CDN uses proprietary technology and many, for security reasons, do not expose cache performance.

- Localization and internationalization: Just like geographic distribution, the effects of language and geo-specific domains are also opaque to the testing.

- CDN detection is primarily done through DNS resolution and HTTP headers. Most CDNs use a DNS CNAME to map a user to an optimal datacenter. However, some CDNs use Anycast IPs or direct A+AAAA responses from a delegated domain, which hide the DNS chain. In other cases, websites use multiple CDNs to balance between vendors, which is hidden from the single-request pass of WebPageTest. All of this limits the effectiveness of the measurements.
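As a rough illustration of the detection approach described in the last item, the sketch below matches a CNAME chain and response headers against small lookup tables. The tables are illustrative assumptions containing only a handful of entries, `detect_cdn` is a hypothetical helper, and none of this is the detection code actually used for the Web Almanac.

```python
from typing import Dict, List, Optional

# Illustrative entries only; a real detection list is far longer.
CNAME_SUFFIXES = {
    ".cloudfront.net": "Amazon CloudFront",
    ".fastly.net": "Fastly",
    ".akamaiedge.net": "Akamai",
}
HEADER_HINTS = {
    "cf-ray": "Cloudflare",
    "x-amz-cf-id": "Amazon CloudFront",
}


def detect_cdn(cname_chain: List[str], headers: Dict[str, str]) -> Optional[str]:
    """Return a CDN name if the DNS chain or response headers match, else None."""
    # First pass: look for a known CDN domain anywhere in the CNAME chain.
    for name in cname_chain:
        host = name.rstrip(".").lower()
        for suffix, cdn in CNAME_SUFFIXES.items():
            if host.endswith(suffix):
                return cdn
    # Second pass: fall back to response headers that a CDN typically adds.
    header_names = {key.lower() for key in headers}
    for header, cdn in HEADER_HINTS.items():
        if header in header_names:
            return cdn
    return None
```

A site fronted by Anycast IPs with no CNAME and no identifying headers would return `None` here, which is exactly the blind spot described above.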

Most importantly, these results reflect a potential utilization but do not reflect actual impact. YouTube is more popular than “ShoesByColin” yet both will appear as equal value when comparing utilization.

With this in mind, a few statistics were intentionally not measured in the context of a CDN:

- TTFB: Measuring the Time to First Byte by CDN would be intellectually dishonest without proper knowledge about cacheability and cache effectiveness. If one site uses a CDN for round trip time (RTT) management but not for caching, it would be at a disadvantage when compared with another site that uses a different CDN vendor but also caches the content. (Note: this does not apply to the TTFB analysis in the Performance chapter because it does not draw conclusions about the performance of individual CDNs.)

- Cache Hit vs. Cache Miss performance: As mentioned previously, this is opaque to the testing apparatus and therefore repeat tests to test page performance with a cold cache vs. a hot cache are unreliable.

Further stats

In future versions of the Web Almanac, we would expect to look more closely at the TLS and RTT management between CDN vendors. Of interest would be the impact of OCSP stapling, differences in TLS cipher performance, CWND (TCP congestion window) growth rate, and specifically the adoption of BBR v1, v2, and traditional TCP Cubic.

CDN adoption and usage

For websites, a CDN can improve performance for the primary domain (www.shoesbycolin.com), sub-domains or sibling domains (images.shoesbycolin.com or checkout.shoesbycolin.com), and finally third parties (Google Analytics, etc.). Using a CDN for each of these use cases improves performance in different ways.

Historically, CDNs were used exclusively for static resources like CSS, JavaScript, and images. These resources would likely be versioned (include a unique number in the path) and cached long-term. In this way we should expect to see higher adoption of CDNs on sub-domains or sibling domains compared to the base HTML domains. The traditional design pattern would expect that www.shoesbycolin.com would serve HTML directly from a datacenter (or origin) while static.shoesbycolin.com would use a CDN.

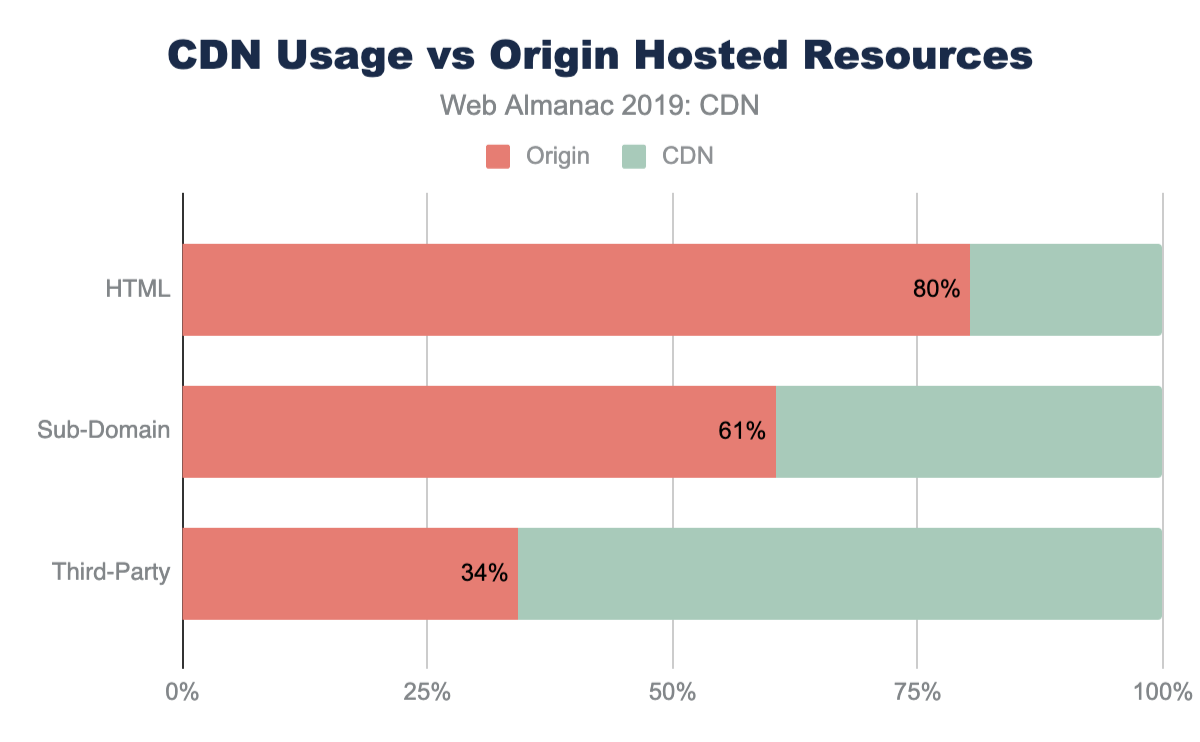

Indeed, this traditional pattern is what we observe on the majority of websites crawled. The majority of web pages (80%) serve the base HTML from origin. This breakdown is nearly identical between mobile and desktop with only 0.4% lower usage of CDNs on desktop. This slight variance is likely due to the small continued use of mobile specific web pages (“mDot”), which more frequently use a CDN.

Likewise, resources served from sub-domains are more likely to utilize a CDN at 40% of sub-domain resources. Sub-domains are used either to partition resources like images and CSS or they are used to reflect organizational teams such as checkout or APIs.

Despite first-party resources still largely being served directly from origin, third-party resources have a substantially higher adoption of CDNs. Nearly 66% of all third-party resources are served from a CDN. Since third-party domains are more likely a SaaS integration, the use of CDNs is more likely core to these business offerings. Most third-party content breaks down to shared resources (JavaScript or font CDNs), augmented content (advertisements), or statistics. In all these cases, using a CDN will improve the performance and offload for these SaaS solutions.

Top CDN providers

There are two categories of CDN providers: the generic and the purpose-fit CDN. The generic CDN providers offer customization and flexibility to serve all kinds of content for many industries. In contrast, the purpose-fit CDN provider offers similar content distribution capabilities but is narrowly focused on a specific solution.

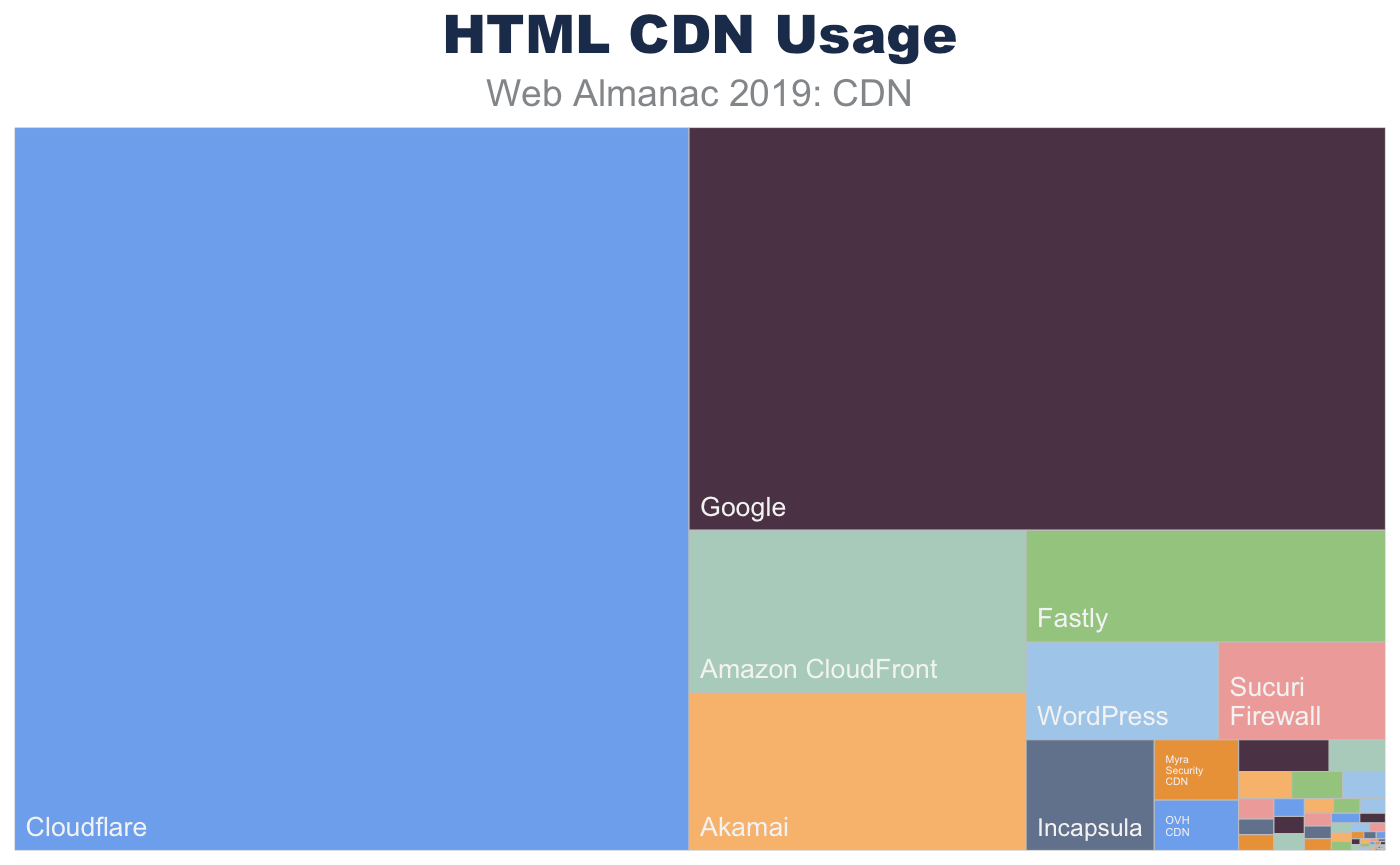

This is clearly represented when looking at the top CDNs found serving the base HTML content. The most frequent CDNs serving HTML are generic CDNs (Cloudflare, Akamai, Fastly) and cloud solution providers who offer a bundled CDN (Google, Amazon) as part of the platform service offerings. In contrast, there are only a few purpose-fit CDN providers, such as WordPress and Netlify, that deliver base HTML markup.

| CDN | HTML CDN usage (%) |

|---|---|

| ORIGIN | 80.39 |

| Cloudflare | 9.61 |

| 5.54 | |

| Amazon CloudFront | 1.08 |

| Akamai | 1.05 |

| Fastly | 0.79 |

| WordPress | 0.37 |

| Sucuri Firewall | 0.31 |

| Incapsula | 0.28 |

| Myra Security CDN | 0.1 |

| OVH CDN | 0.08 |

| Netlify | 0.06 |

| Edgecast | 0.04 |

| GoCache | 0.03 |

| Highwinds | 0.03 |

| CDNetworks | 0.02 |

| Limelight | 0.01 |

| Level 3 | 0.01 |

| NetDNA | 0.01 |

| StackPath | 0.01 |

| Instart Logic | 0.01 |

| Azion | 0.01 |

| Yunjiasu | 0.01 |

| section.io | 0.01 |

| Microsoft Azure | 0.01 |

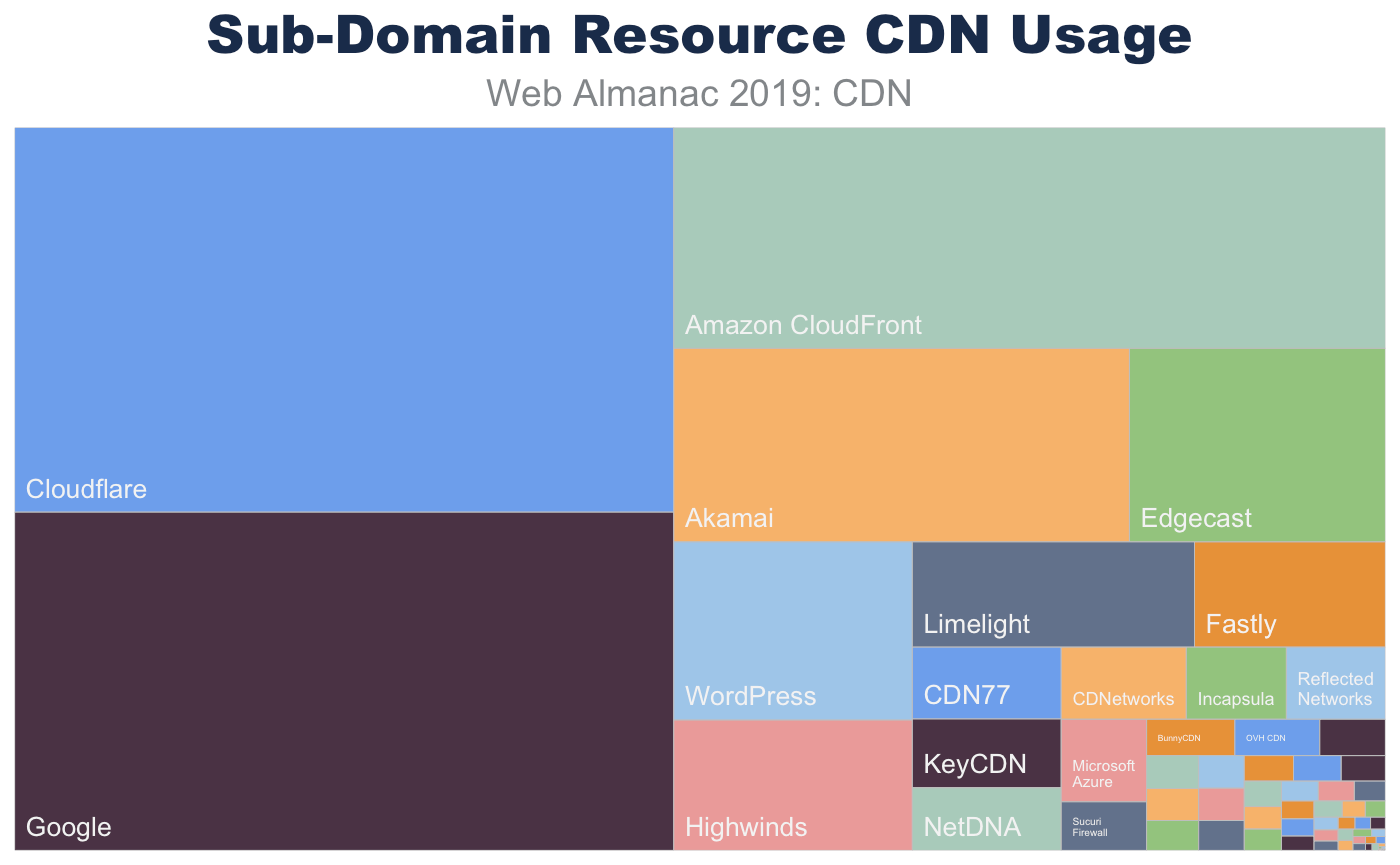

Sub-domain requests have a very similar composition. Since many websites use sub-domains for static content, we see a shift to a higher CDN usage. Like the base page requests, the resources served from these sub-domains utilize generic CDN offerings.

| CDN | Sub-domain CDN usage (%) |

|---|---|

| ORIGIN | 60.56 |

| Cloudflare | 10.06 |

| 8.86 | |

| Amazon CloudFront | 6.24 |

| Akamai | 3.5 |

| Edgecast | 1.97 |

| WordPress | 1.69 |

| Highwinds | 1.24 |

| Limelight | 1.18 |

| Fastly | 0.8 |

| CDN77 | 0.43 |

| KeyCDN | 0.41 |

| NetDNA | 0.37 |

| CDNetworks | 0.36 |

| Incapsula | 0.29 |

| Microsoft Azure | 0.28 |

| Reflected Networks | 0.28 |

| Sucuri Firewall | 0.16 |

| BunnyCDN | 0.13 |

| OVH CDN | 0.12 |

| Advanced Hosters CDN | 0.1 |

| Myra Security CDN | 0.07 |

| CDNvideo | 0.07 |

| Level 3 | 0.06 |

| StackPath | 0.06 |

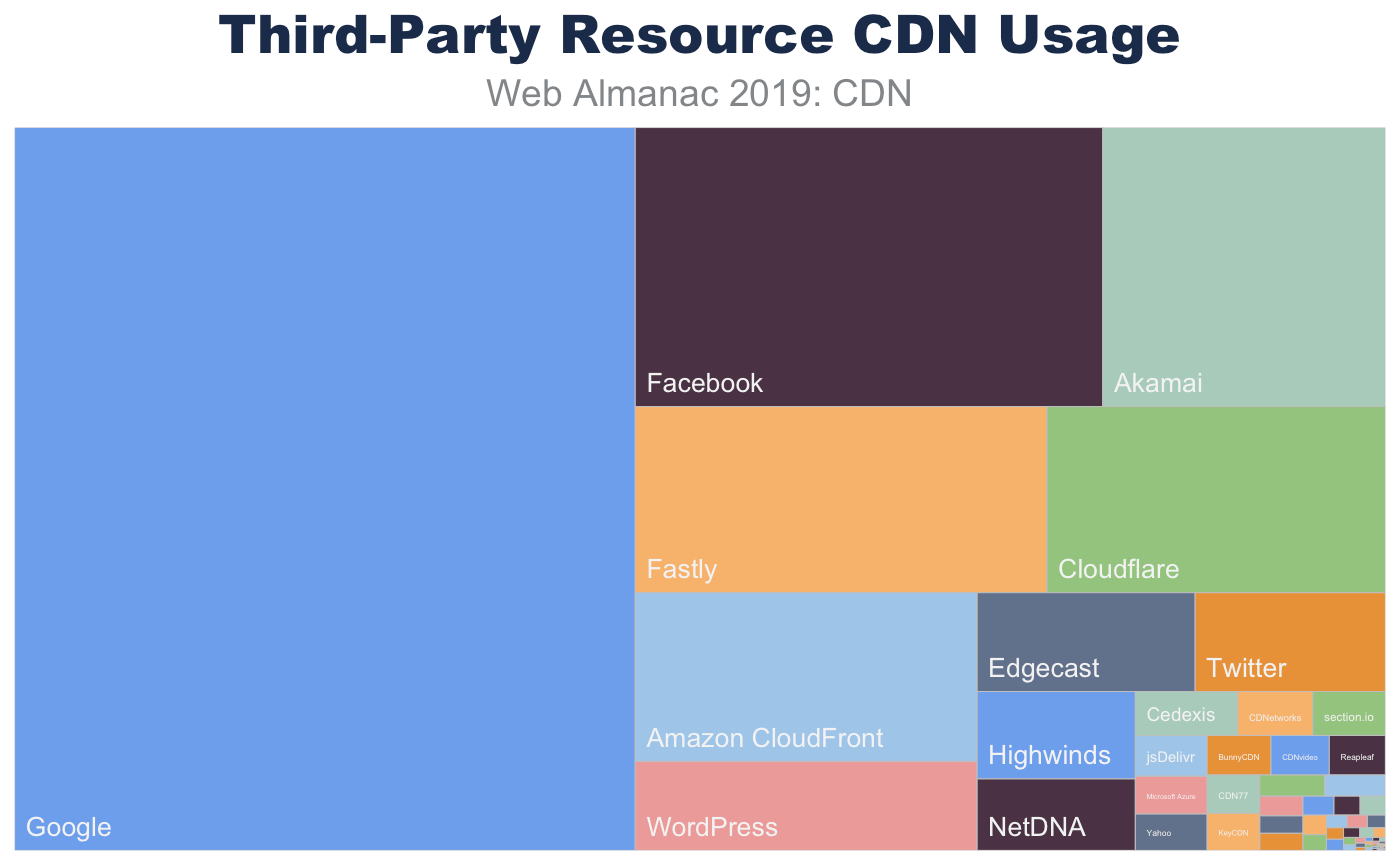

The composition of top CDN providers dramatically shifts for third-party resources. Not only are CDNs more frequently observed hosting third-party resources, there is also an increase in purpose-fit CDN providers such as Facebook, Twitter, and Google.

| CDN | Third-party CDN usage (%) |

|---|---|

| ORIGIN | 34.27 |

| 29.61 | |

| 8.47 | |

| Akamai | 5.25 |

| Fastly | 5.14 |

| Cloudflare | 4.21 |

| Amazon CloudFront | 3.87 |

| WordPress | 2.06 |

| Edgecast | 1.45 |

| 1.27 | |

| Highwinds | 0.94 |

| NetDNA | 0.77 |

| Cedexis | 0.3 |

| CDNetworks | 0.22 |

| section.io | 0.22 |

| jsDelivr | 0.2 |

| Microsoft Azure | 0.18 |

| Yahoo | 0.18 |

| BunnyCDN | 0.17 |

| CDNvideo | 0.16 |

| Reapleaf | 0.15 |

| CDN77 | 0.14 |

| KeyCDN | 0.13 |

| Azion | 0.09 |

| StackPath | 0.09 |

RTT and TLS management

CDNs can offer more than simple caching for website performance. Many CDNs also support a pass-through mode for dynamic or personalized content when an organization has a legal or other business requirement prohibiting the content from being cached. Utilizing a CDN’s physical distribution enables increased performance for TCP RTT for end users. As others have noted, reducing RTT is the most effective means to improve web page performance compared to increasing bandwidth.

Using a CDN in this way can improve page performance in two ways:

- Reduce RTT for TCP and TLS negotiation. The speed of light is only so fast and CDNs offer a highly distributed set of data centers that are closer to the end users. In this way the logical (and physical) distance that packets must traverse to negotiate a TCP connection and perform the TLS handshake can be greatly reduced.

  Reducing RTT has three immediate benefits. First, it improves the time for the user to receive data, because TCP and TLS connection times are RTT-bound. Second, it improves the time it takes to grow the congestion window and utilize the full amount of bandwidth the user has available. Finally, it reduces the probability of packet loss. When the RTT is high, network interfaces will time out requests and resend packets. This can result in duplicate packets being delivered.

- Use pre-warmed TCP connections to the back-end origin. Just as terminating the connection closer to the user will improve the time it takes to grow the congestion window, the CDN can relay the request to the origin on pre-established TCP connections that have already maximized congestion windows. In this way the origin can return the dynamic content in fewer TCP round trips and the content can be ready sooner for delivery to the waiting user.
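The effect of congestion window growth on both points can be sketched with an idealized slow-start model. The example below assumes the window doubles every round trip with no loss, an initial window of 10 segments, and a 1,460-byte segment size. The 100,000-byte response and the warm window of 80 segments are hypothetical values for illustration, not measurements.

```python
def round_trips_to_deliver(total_bytes: int, init_cwnd: int = 10, mss: int = 1460) -> int:
    """Round trips needed to send total_bytes under idealized slow start.

    Assumes the congestion window (in segments) doubles each round trip
    and that no packets are lost.
    """
    cwnd = init_cwnd
    sent = 0
    trips = 0
    while sent < total_bytes:
        sent += cwnd * mss  # one full window is delivered per round trip
        cwnd *= 2           # slow start: the window doubles after each round trip
        trips += 1
    return trips


response_bytes = 100_000  # hypothetical dynamic response size
cold = round_trips_to_deliver(response_bytes)                 # fresh connection
warm = round_trips_to_deliver(response_bytes, init_cwnd=80)   # pre-warmed connection
print(f"cold connection: {cold} round trips, warm connection: {warm} round trip(s)")
```

Multiplying each count by the RTT of the path shows why a short RTT to the user and a warm connection to the origin compound: the cold case pays the long origin RTT several times, while the warm case pays it once.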

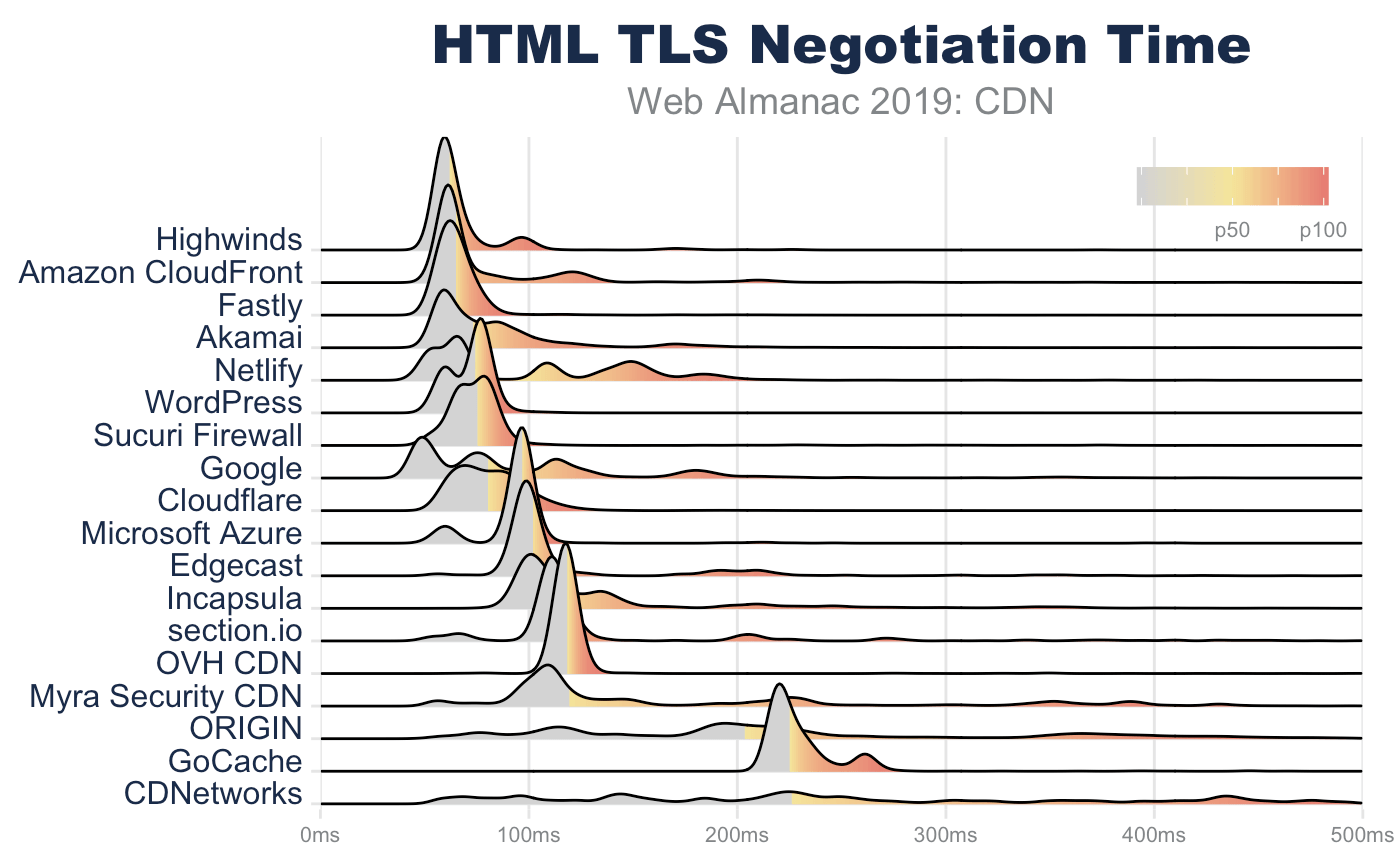

TLS negotiation time: origin 3x slower than CDNs

Since TLS negotiations require multiple TCP round trips before data can be sent from a server, simply improving the RTT can significantly improve the page performance. For example, looking at the base HTML page, the median TLS negotiation time for origin requests is 207 ms (for desktop WebPageTest). This alone accounts for 10% of a 2 second performance budget, and this is under ideal network conditions where there is no latency applied on the request.
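The relationship between RTT and negotiation time can be sketched as simple arithmetic. The example below assumes a full TLS 1.2 handshake costs two round trips and a TLS 1.3 handshake costs one, ignoring certificate processing and server think time. The RTT values of 20 ms and 100 ms are hypothetical, while the 207 ms and 2 second figures come from the text above.

```python
def tls_negotiation_ms(rtt_ms: float, round_trips: int = 2) -> float:
    """Idealized TLS negotiation time: round trips multiplied by RTT."""
    return rtt_ms * round_trips


def share_of_budget(duration_ms: float, budget_ms: float = 2000.0) -> float:
    """Fraction of a performance budget consumed by duration_ms."""
    return duration_ms / budget_ms


# Median origin TLS negotiation from the text, against a 2 second budget.
print(f"207 ms uses {share_of_budget(207):.0%} of a 2 s budget")

# Hypothetical RTTs: a nearby CDN edge versus a distant origin.
for rtt in (20, 100):
    tls12 = tls_negotiation_ms(rtt, round_trips=2)
    tls13 = tls_negotiation_ms(rtt, round_trips=1)
    print(f"RTT {rtt} ms: TLS 1.2 about {tls12:.0f} ms, TLS 1.3 about {tls13:.0f} ms")
```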

In contrast, the median TLS negotiation for the majority of CDN providers is between 60 and 70 ms. Origin requests for HTML pages take almost 3x longer to complete TLS negotiation than those web pages that use a CDN. Even at the 90th percentile, this disparity persists, with origin TLS negotiation times of 427 ms compared to most CDNs, which complete in under 140 ms!

| CDN | 10th percentile | 25th percentile | 50th percentile | 75th percentile | 90th percentile |

|---|---|---|---|---|---|

| Highwinds | 58 | 58 | 60 | 66 | 94 |

| Fastly | 56 | 59 | 63 | 69 | 75 |

| WordPress | 58 | 62 | 76 | 77 | 80 |

| Sucuri Firewall | 63 | 66 | 77 | 80 | 86 |

| Amazon CloudFront | 59 | 61 | 62 | 83 | 128 |

| Cloudflare | 62 | 68 | 80 | 92 | 103 |

| Akamai | 57 | 59 | 72 | 93 | 134 |

| Microsoft Azure | 62 | 93 | 97 | 98 | 101 |

| Edgecast | 94 | 97 | 100 | 110 | 221 |

| 47 | 53 | 79 | 119 | 184 | |

| OVH CDN | 114 | 115 | 118 | 120 | 122 |

| section.io | 105 | 108 | 112 | 120 | 210 |

| Incapsula | 96 | 100 | 111 | 139 | 243 |

| Netlify | 53 | 64 | 73 | 145 | 166 |

| Myra Security CDN | 95 | 106 | 118 | 226 | 365 |

| GoCache | 217 | 219 | 223 | 234 | 260 |

| ORIGIN | 100 | 138 | 207 | 342 | 427 |

| CDNetworks | 85 | 143 | 229 | 369 | 452 |

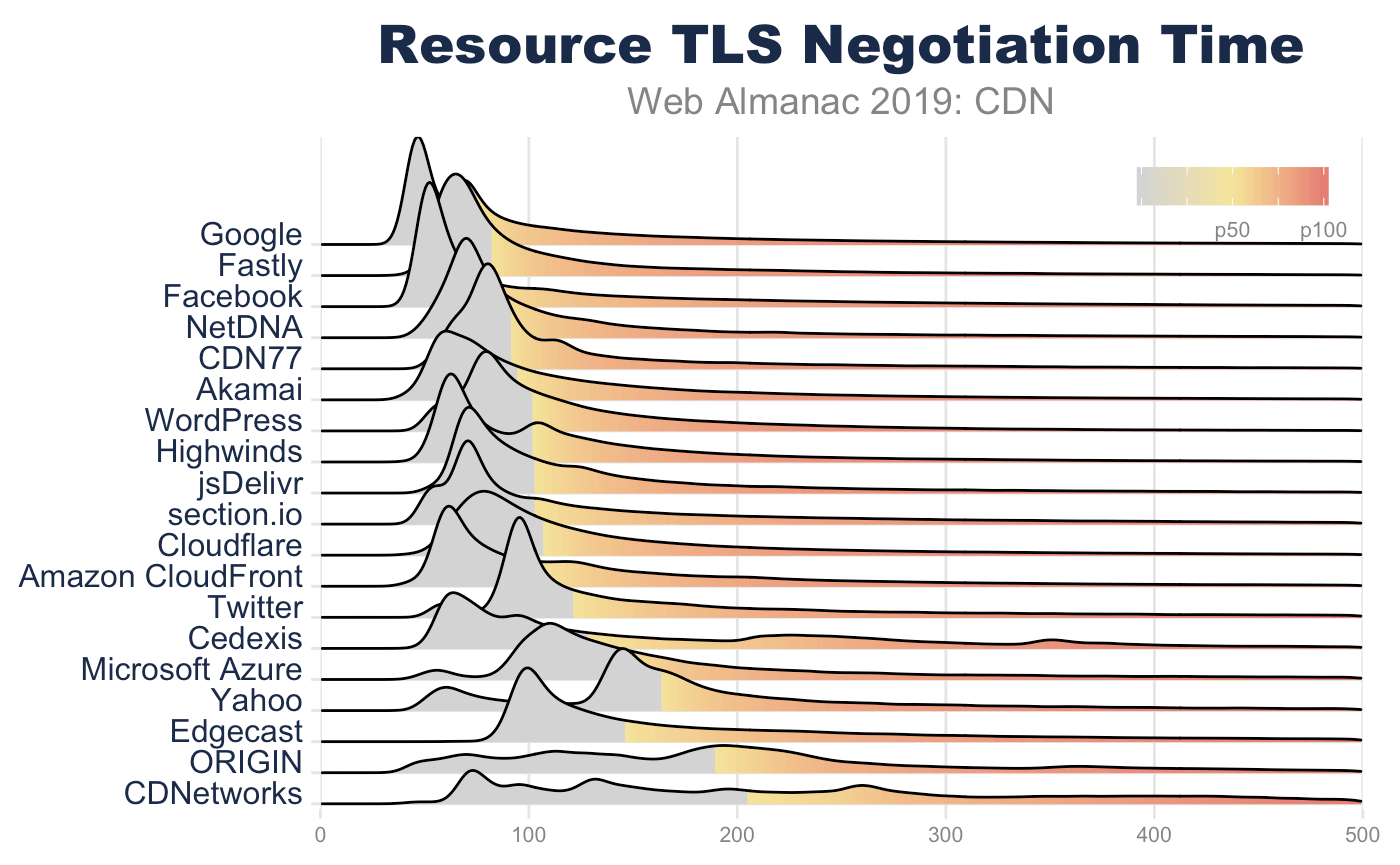

For resource requests (including same-domain and third-party), the TLS negotiation time takes longer and the variance increases. This is expected because of network saturation and network congestion. By the time that a third-party connection is established (by way of a resource hint or a resource request) the browser is busy rendering and making other parallel requests. This creates contention on the network. Despite this disadvantage, there is still a clear advantage for third-party resources that utilize a CDN over using an origin solution.

TLS handshake performance is impacted by a number of factors. These include RTT, TLS record size, and TLS certificate size. While RTT has the biggest impact on the TLS handshake, the second largest driver for TLS performance is the TLS certificate size.

During the first round trip of the TLS handshake, the server attaches its certificate. This certificate is then verified by the client before proceeding. In this certificate exchange, the server might include the certificate chain by which it can be verified. After this certificate exchange, additional keys are established to encrypt the communication. However, the length and size of the certificate can negatively impact the TLS negotiation performance, and in some cases, crash client libraries.

The certificate exchange is at the foundation of the TLS handshake and is usually handled by isolated code paths so as to minimize the attack surface for exploits. Because of its low level nature, buffers are usually not dynamically allocated, but fixed. In this way, we cannot simply assume that the client can handle an unlimited-sized certificate. For example, OpenSSL CLI tools and Safari can successfully negotiate against https://10000-sans.badssl.com. Yet, Chrome and Firefox fail because of the size of the certificate.

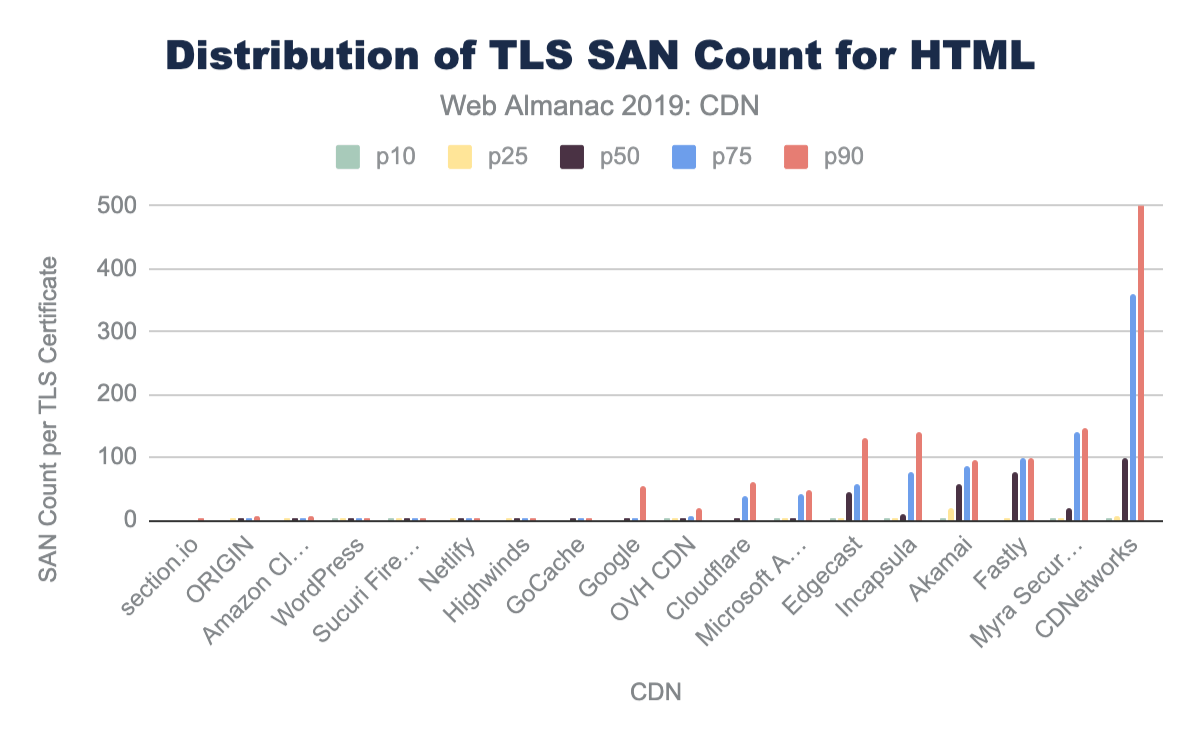

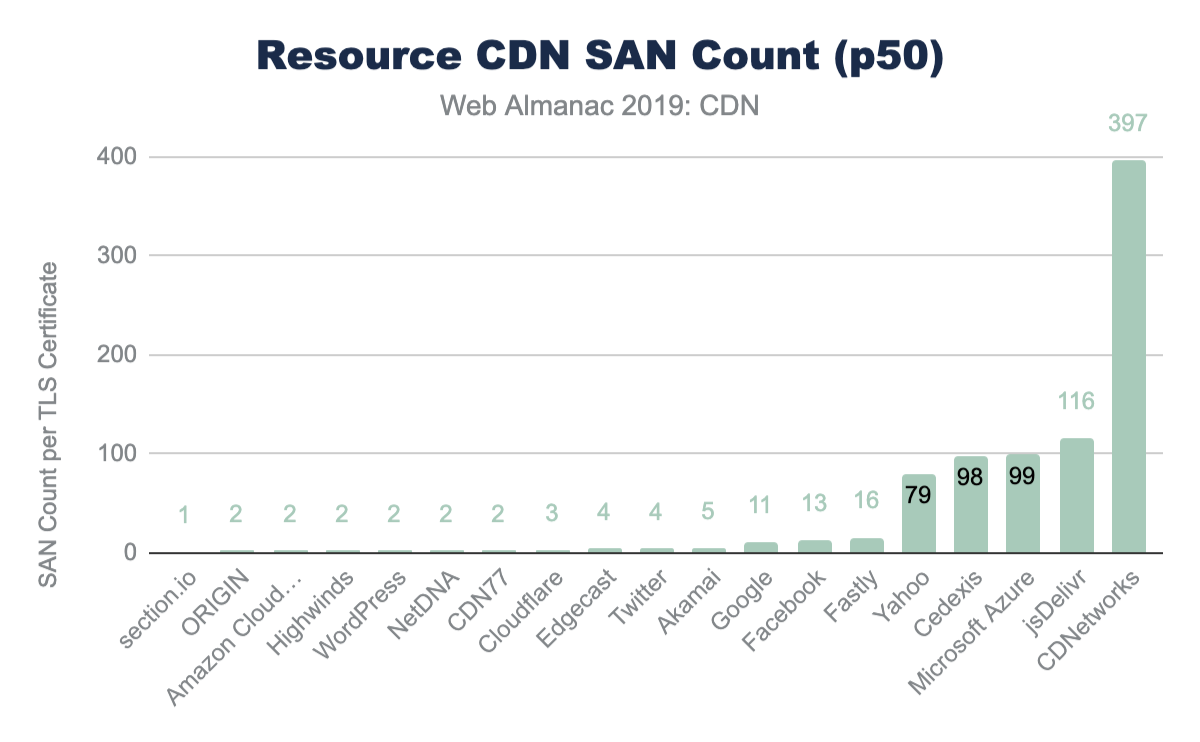

While extreme sizes of certificates can cause failures, even sending moderately large certificates has a performance impact. A certificate can be valid for one or more hostnames, which are listed in the Subject Alternative Name (SAN) field. The more SANs, the larger the certificate. It is the processing of these SANs during verification that causes performance to degrade. To be clear, the performance cost of certificate size is not about TCP overhead; rather, it is about the processing performance of the client.

Technically, TCP slow start can impact this negotiation but it is very improbable. TLS record length is limited to 16 KB, which fits into a typical initial congestion window of 10. While some ISPs might employ packet splicers, and other tools fragment congestion windows to artificially throttle bandwidth, this isn’t something that a website owner can change or manipulate.

Many CDNs, however, depend on shared TLS certificates and will list many customers in the SAN of a certificate. This is often necessary because of the scarcity of IPv4 addresses. Prior to the adoption of Server Name Indication (SNI) by end users, the client would connect to a server and, only after inspecting the certificate, hint which hostname the user was looking for (using the Host header in HTTP). This results in a 1:1 association of an IP address and a certificate. If you are a CDN with many physical locations, each location may require a dedicated IP, further aggravating the exhaustion of IPv4 addresses. Therefore, the simplest and most efficient way for CDNs to offer TLS certificates for websites that still have users that don’t support SNI is to offer a shared certificate.

According to Akamai, the adoption of SNI is still not 100% globally. Fortunately there has been a rapid shift in recent years. The biggest culprits are no longer Windows XP and Vista, but now Android apps, bots, and corporate applications. Even at 99% adoption, the remaining 1% of 3.5 billion users on the internet can create a very compelling motivation for website owners to require a non-SNI certificate. Put another way, a pure play website can enjoy a virtually 100% SNI adoption among standard web browsers. Yet, if the website is also used to support APIs or WebViews in apps, particularly Android apps, this distribution can drop rapidly.

Most CDNs balance the need for shared certificates against performance. Most cap the number of SANs between 100 and 150. This limit often derives from the certificate providers. For example, Let’s Encrypt, DigiCert, and GoDaddy all limit SAN certificates to 100 hostnames while Comodo’s limit is 2,000. This, in turn, allows some CDNs to push this limit, cresting over 800 SANs on a single certificate. There is a strong negative correlation between TLS performance and the number of SANs on a certificate.
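For readers who want to inspect SAN counts themselves, the sketch below uses Python's standard `ssl` module. It is one possible approach and not the method used to gather the Web Almanac data. `count_sans` and `fetch_cert` are hypothetical helper names, and `fetch_cert` needs network access.

```python
import socket
import ssl
from typing import Any, Dict


def count_sans(cert: Dict[str, Any]) -> int:
    """Count DNS names in the subjectAltName field of a certificate dict.

    Expects the dict format returned by ssl.SSLSocket.getpeercert().
    """
    return sum(1 for kind, _ in cert.get("subjectAltName", ()) if kind == "DNS")


def fetch_cert(hostname: str, port: int = 443, timeout: float = 5.0) -> Dict[str, Any]:
    """Open a TLS connection with SNI and return the validated peer certificate."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()
```

Calling `count_sans(fetch_cert("example.com"))` returns the number of hostnames on the certificate presented for that name. Because `fetch_cert` sends SNI, a CDN may present a different certificate than it would to a client without SNI support.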

| CDN | 10th percentile | 25th percentile | 50th percentile | 75th percentile | 90th percentile |

|---|---|---|---|---|---|

| section.io | 1 | 1 | 1 | 1 | 2 |

| ORIGIN | 1 | 2 | 2 | 2 | 7 |

| Amazon CloudFront | 1 | 2 | 2 | 2 | 8 |

| WordPress | 2 | 2 | 2 | 2 | 2 |

| Sucuri Firewall | 2 | 2 | 2 | 2 | 2 |

| Netlify | 1 | 2 | 2 | 2 | 3 |

| Highwinds | 1 | 2 | 2 | 2 | 2 |

| GoCache | 1 | 1 | 2 | 2 | 4 |

| 1 | 1 | 2 | 3 | 53 | |

| OVH CDN | 2 | 2 | 3 | 8 | 19 |

| Cloudflare | 1 | 1 | 3 | 39 | 59 |

| Microsoft Azure | 2 | 2 | 2 | 43 | 47 |

| Edgecast | 2 | 4 | 46 | 56 | 130 |

| Incapsula | 2 | 2 | 11 | 78 | 140 |

| Akamai | 2 | 18 | 57 | 85 | 95 |

| Fastly | 1 | 2 | 77 | 100 | 100 |

| Myra Security CDN | 2 | 2 | 18 | 139 | 145 |

| CDNetworks | 2 | 7 | 100 | 360 | 818 |

| CDN | 10th percentile | 25th percentile | 50th percentile | 75th percentile | 90th percentile |

|---|---|---|---|---|---|

| section.io | 1 | 1 | 1 | 1 | 1 |

| ORIGIN | 1 | 2 | 2 | 3 | 10 |

| Amazon CloudFront | 1 | 1 | 2 | 2 | 6 |

| Highwinds | 2 | 2 | 2 | 3 | 79 |

| WordPress | 2 | 2 | 2 | 2 | 2 |

| NetDNA | 2 | 2 | 2 | 2 | 2 |

| CDN77 | 2 | 2 | 2 | 2 | 10 |

| Cloudflare | 2 | 3 | 3 | 3 | 35 |

| Edgecast | 2 | 4 | 4 | 4 | 4 |

| 2 | 4 | 4 | 4 | 4 | |

| Akamai | 2 | 2 | 5 | 20 | 54 |

| 1 | 10 | 11 | 55 | 68 | |

| 13 | 13 | 13 | 13 | 13 | |

| Fastly | 2 | 4 | 16 | 98 | 128 |

| Yahoo | 6 | 6 | 79 | 79 | 79 |

| Cedexis | 2 | 2 | 98 | 98 | 98 |

| Microsoft Azure | 2 | 43 | 99 | 99 | 99 |

| jsDelivr | 2 | 116 | 116 | 116 | 116 |

| CDNetworks | 132 | 178 | 397 | 398 | 645 |

TLS adoption

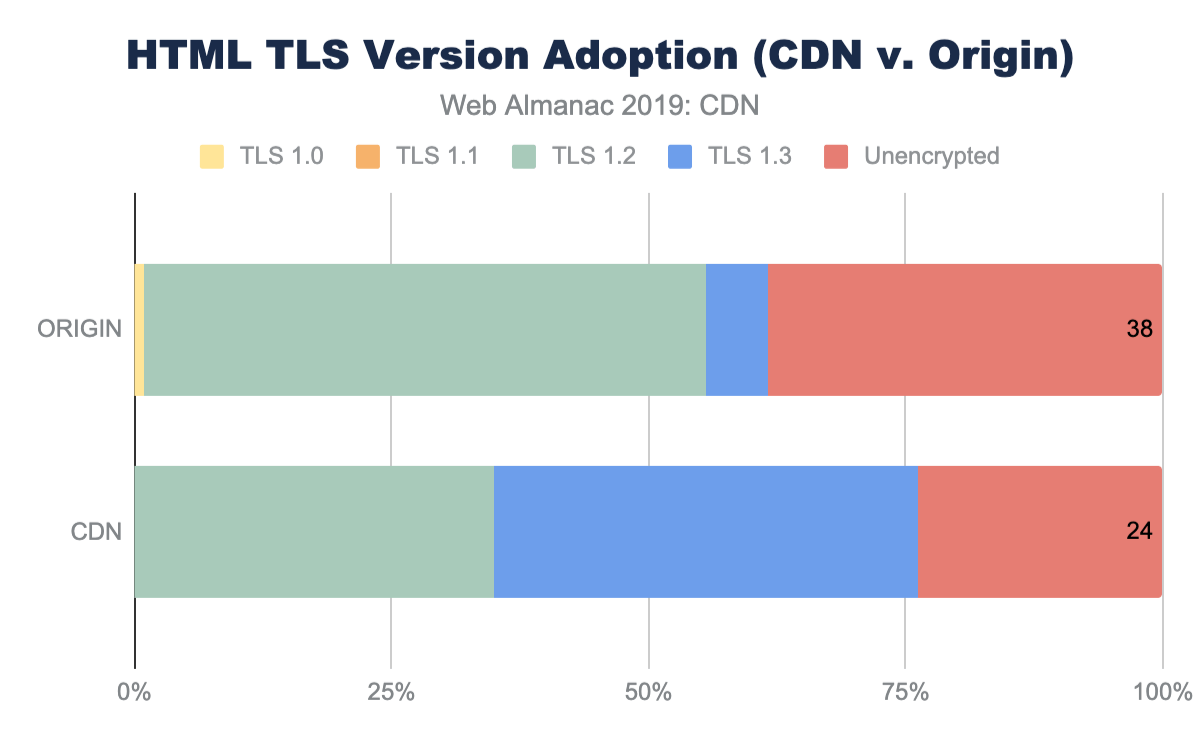

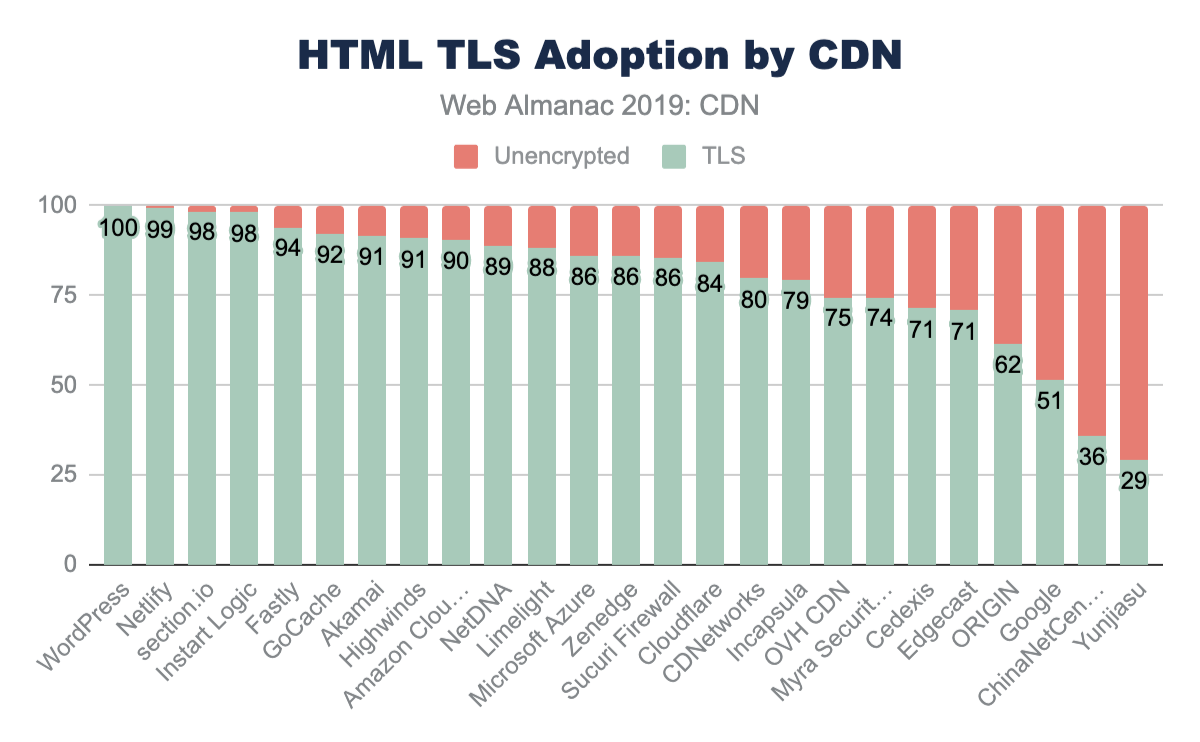

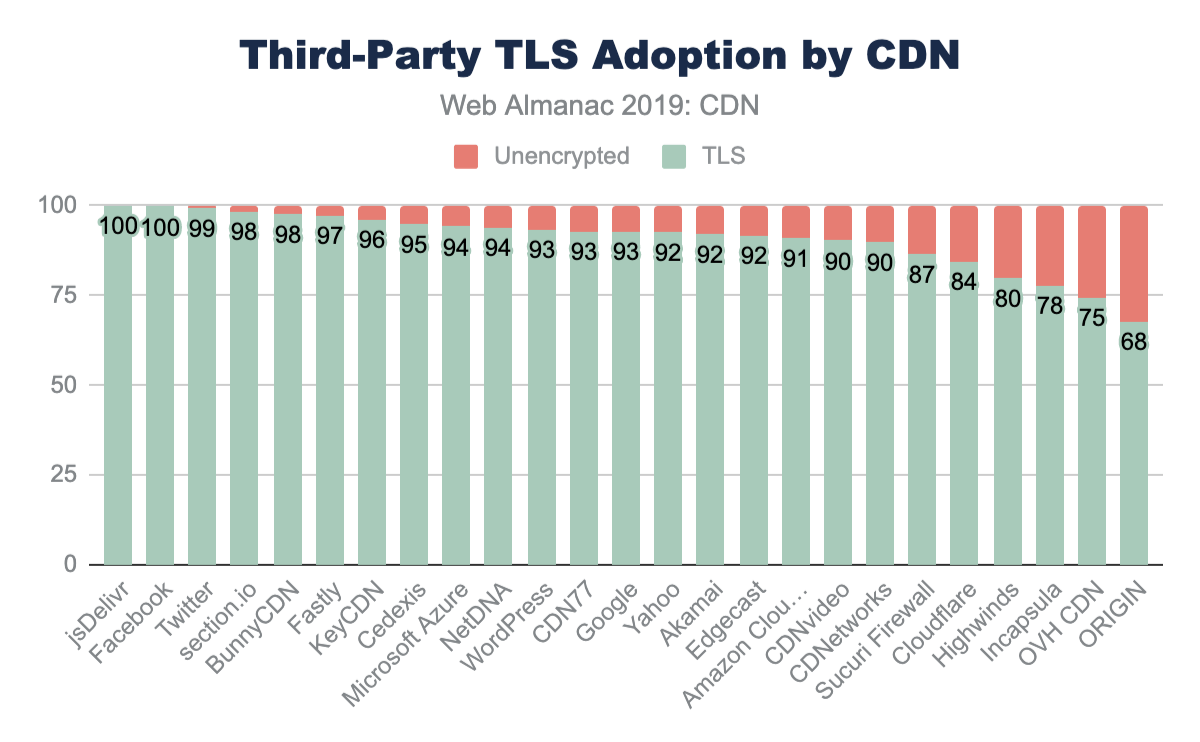

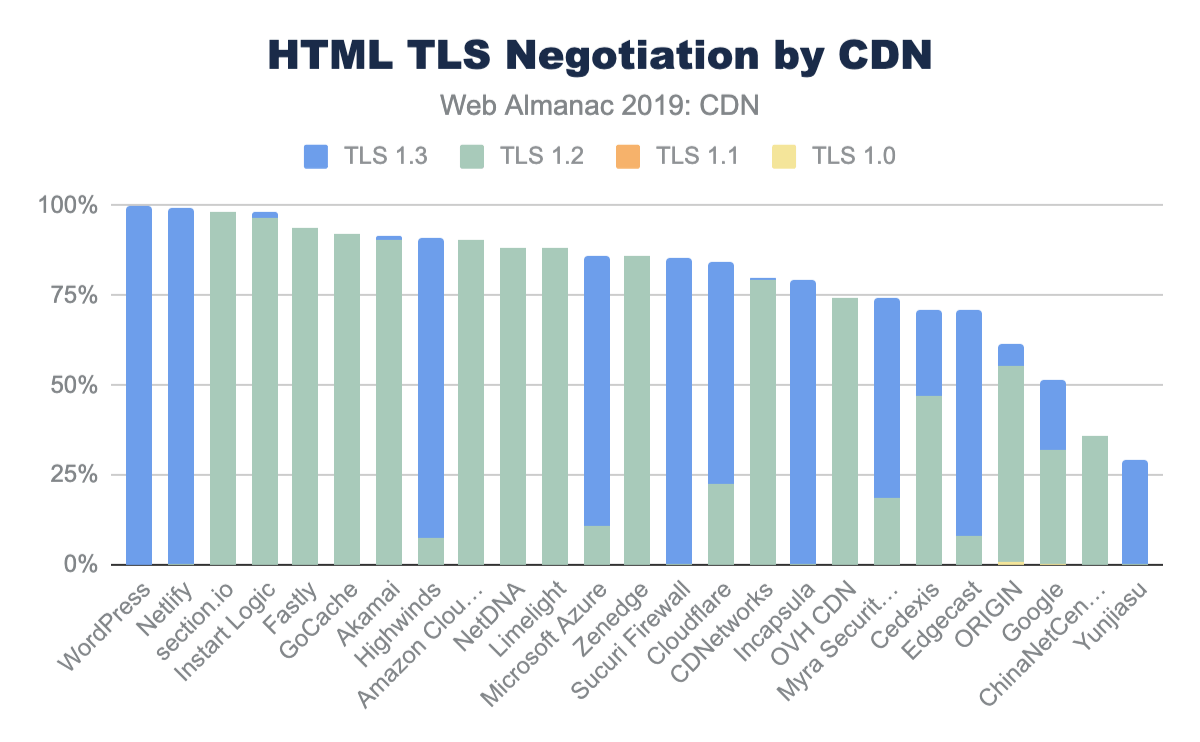

In addition to using a CDN for TLS and RTT performance, CDNs are often used to ensure patching and adoption of TLS ciphers and TLS versions. In general, the adoption of TLS on the main HTML page is much higher for websites that use a CDN. Over 76% of HTML pages served from a CDN use TLS, compared with 62% of origin-hosted pages.

Each CDN offers different rates of adoption for both TLS and the relative ciphers and versions offered. Some CDNs are more aggressive and roll out these changes to all customers whereas other CDNs require website owners to opt-in to the latest changes and offer change-management to facilitate these ciphers and versions.

Along with this general adoption of TLS, CDN use also sees higher adoption of emerging TLS versions like TLS 1.3.

In general, the use of a CDN is highly correlated with a more rapid adoption of stronger ciphers and stronger TLS versions compared to origin-hosted services where there is a higher usage of very old and compromised TLS versions like TLS 1.0.

More discussion of TLS versions and ciphers can be found in the Security and HTTP/2 chapters.

HTTP/2 adoption

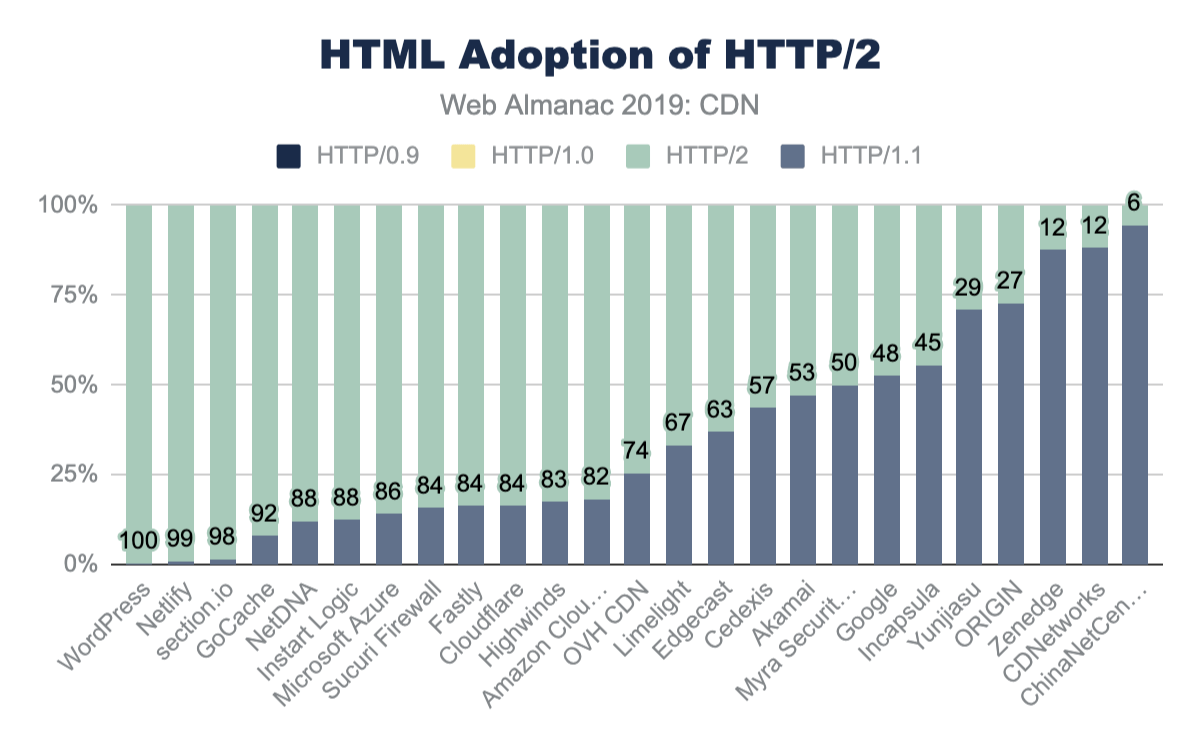

Along with RTT management and improving TLS performance, CDNs also enable new standards like HTTP/2 and IPv6. While most CDNs offer support for HTTP/2 and many have signaled early support of the still-under-standards-development HTTP/3, adoption still depends on website owners to enable these new features. Despite the change-management overhead, the majority of the HTML served from CDNs has HTTP/2 enabled.

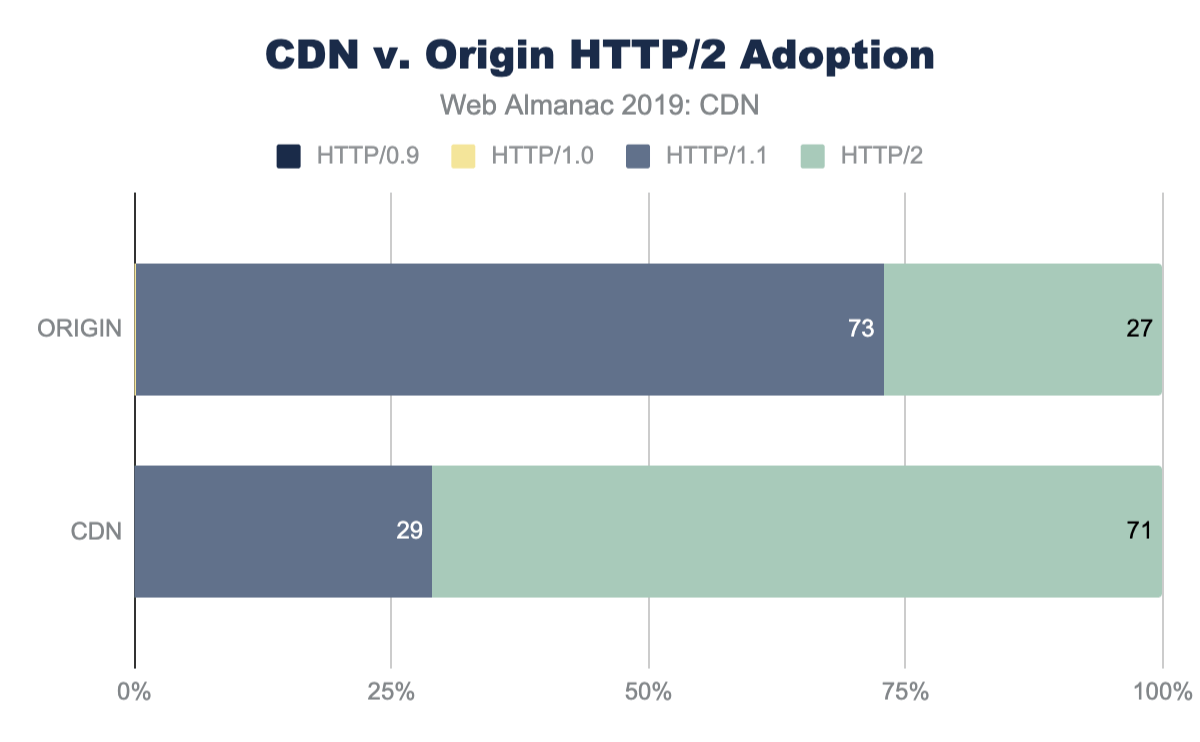

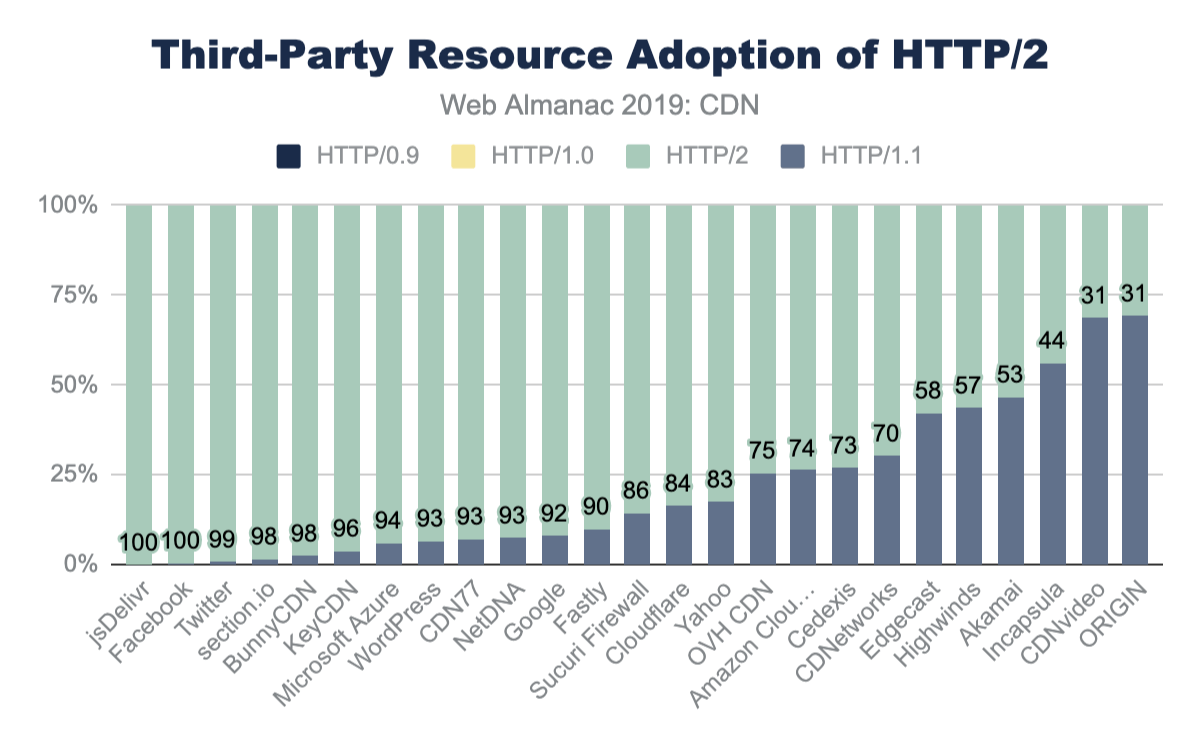

CDNs have over 70% adoption of HTTP/2, compared to nearly 27% of origin pages. Similarly, sub-domain and third-party resources on CDNs see an even higher adoption of HTTP/2 at 90% or higher, while third-party resources served from origin infrastructure have only 31% adoption. The performance gains and other features of HTTP/2 are further covered in the HTTP/2 chapter.

| CDN | HTTP/0.9 | HTTP/1.0 | HTTP/1.1 | HTTP/2 |

|---|---|---|---|---|

| WordPress | 0 | 0 | 0.38 | 100 |

| Netlify | 0 | 0 | 1.07 | 99 |

| section.io | 0 | 0 | 1.56 | 98 |

| GoCache | 0 | 0 | 7.97 | 92 |

| NetDNA | 0 | 0 | 12.03 | 88 |

| Instart Logic | 0 | 0 | 12.36 | 88 |

| Microsoft Azure | 0 | 0 | 14.06 | 86 |

| Sucuri Firewall | 0 | 0 | 15.65 | 84 |

| Fastly | 0 | 0 | 16.34 | 84 |

| Cloudflare | 0 | 0 | 16.43 | 84 |

| Highwinds | 0 | 0 | 17.34 | 83 |

| Amazon CloudFront | 0 | 0 | 18.19 | 82 |

| OVH CDN | 0 | 0 | 25.53 | 74 |

| Limelight | 0 | 0 | 33.16 | 67 |

| Edgecast | 0 | 0 | 37.04 | 63 |

| Cedexis | 0 | 0 | 43.44 | 57 |

| Akamai | 0 | 0 | 47.17 | 53 |

| Myra Security CDN | 0 | 0.06 | 50.05 | 50 |

| 0 | 0 | 52.45 | 48 | |

| Incapsula | 0 | 0.01 | 55.41 | 45 |

| Yunjiasu | 0 | 0 | 70.96 | 29 |

| ORIGIN | 0 | 0.1 | 72.81 | 27 |

| Zenedge | 0 | 0 | 87.54 | 12 |

| CDNetworks | 0 | 0 | 88.21 | 12 |

| ChinaNetCenter | 0 | 0 | 94.49 | 6 |

| CDN | HTTP/0.9 | HTTP/1.0 | HTTP/1.1 | HTTP/2 |

|---|---|---|---|---|

| jsDelivr | 0 | 0 | 0 | 100 |

| 0 | 0 | 0 | 100 | |

| 0 | 0 | 1 | 99 | |

| section.io | 0 | 0 | 2 | 98 |

| BunnyCDN | 0 | 0 | 2 | 98 |

| KeyCDN | 0 | 0 | 4 | 96 |

| Microsoft Azure | 0 | 0 | 6 | 94 |

| WordPress | 0 | 0 | 7 | 93 |

| CDN77 | 0 | 0 | 7 | 93 |

| NetDNA | 0 | 0 | 7 | 93 |

| 0 | 0 | 8 | 92 | |

| Fastly | 0 | 0 | 10 | 90 |

| Sucuri Firewall | 0 | 0 | 14 | 86 |

| Cloudflare | 0 | 0 | 16 | 84 |

| Yahoo | 0 | 0 | 17 | 83 |

| OVH CDN | 0 | 0 | 26 | 75 |

| Amazon CloudFront | 0 | 0 | 26 | 74 |

| Cedexis | 0 | 0 | 27 | 73 |

| CDNetworks | 0 | 0 | 30 | 70 |

| Edgecast | 0 | 0 | 42 | 58 |

| Highwinds | 0 | 0 | 43 | 57 |

| Akamai | 0 | 0.01 | 47 | 53 |

| Incapsula | 0 | 0 | 56 | 44 |

| CDNvideo | 0 | 0 | 68 | 31 |

| ORIGIN | 0 | 0.07 | 69 | 31 |

Controlling CDN caching behavior

Vary

A website can control the caching behavior of browsers and CDNs with the use of different HTTP headers. The most common is the Cache-Control header which specifically determines how long something can be cached before returning to the origin to ensure it is up-to-date.

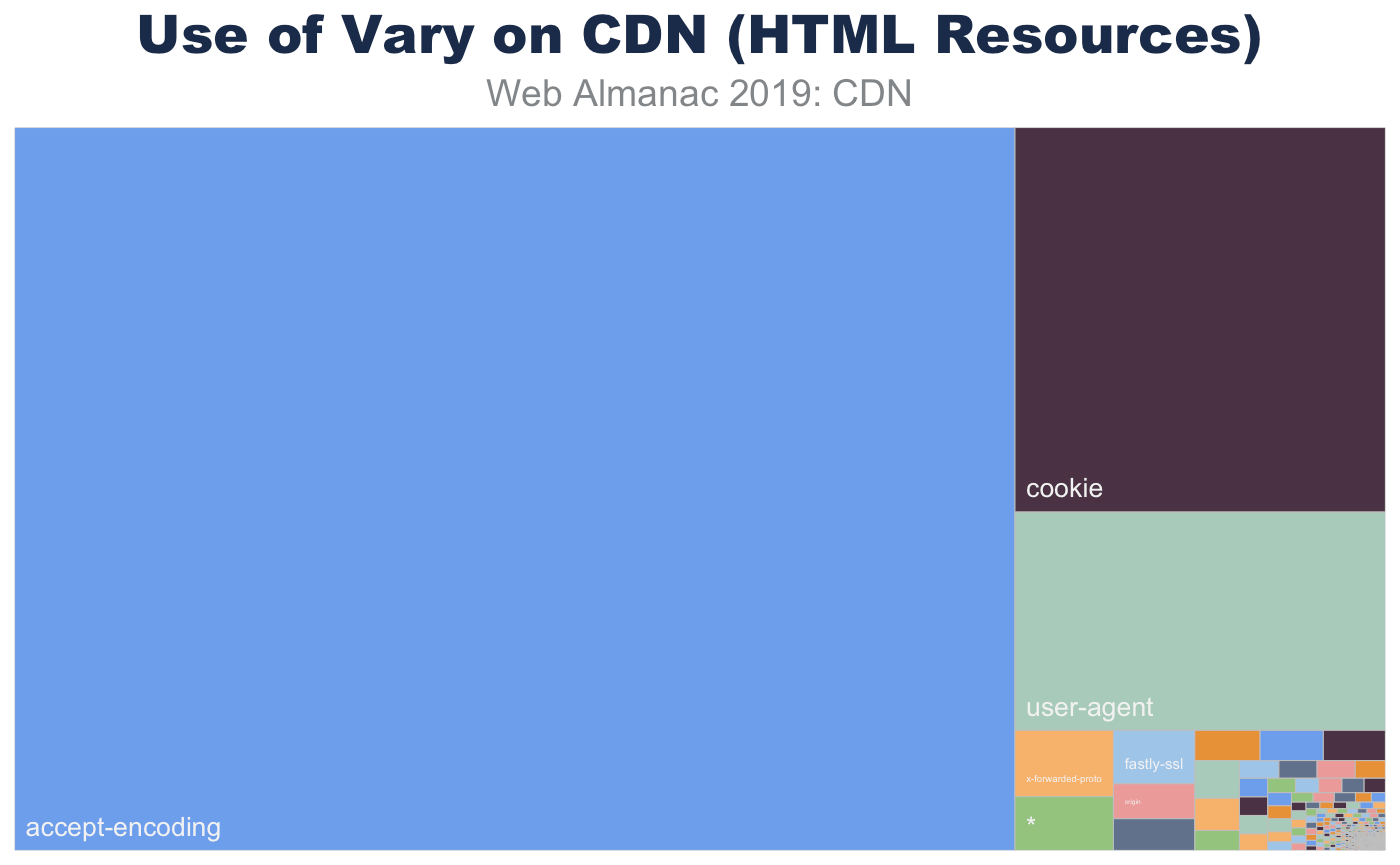

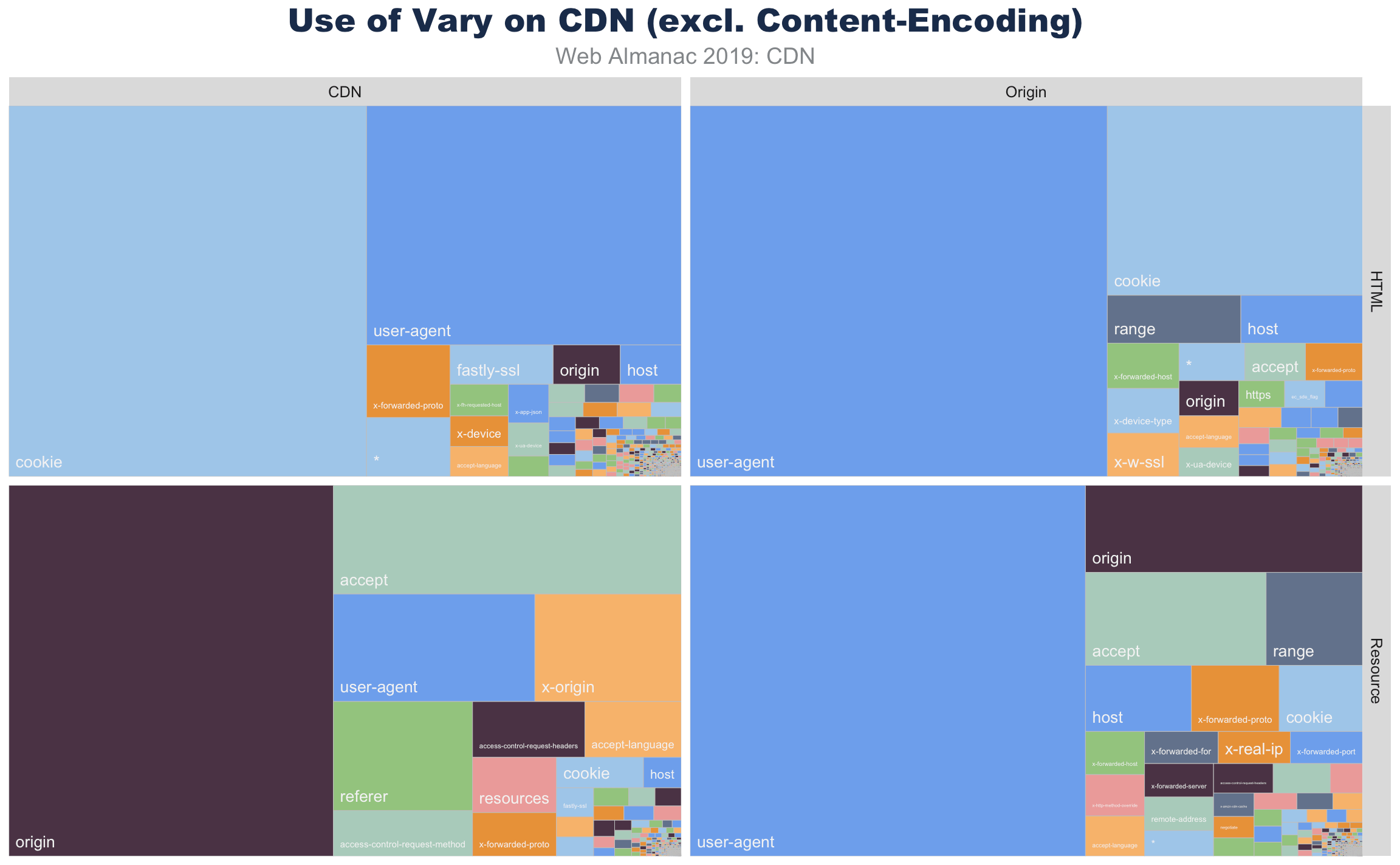

Another useful tool is the use of the Vary HTTP header. This header instructs both CDNs and browsers how to fragment a cache. The Vary header allows an origin to indicate that there are multiple representations of a resource, and the CDN should cache each variation separately. The most common example is compression. Declaring a resource as Vary: Accept-Encoding allows the CDN to cache the same content, but in different forms like uncompressed, with Gzip, or Brotli. Some CDNs even do this compression on the fly so as to keep only one copy available. This Vary header likewise also instructs the browser how to cache the content and when to request new content.
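Cache fragmentation by Vary can be sketched as a cache key that combines the URL with the request headers named in the response's Vary header. The `cache_key` function below is a hypothetical illustration rather than any CDN's actual implementation. Real caches typically normalize header values (for example, collapsing the many possible Accept-Encoding strings into a few buckets) before building the key.

```python
from typing import Dict, Tuple

CacheKey = Tuple[str, Tuple[Tuple[str, str], ...]]


def cache_key(url: str, request_headers: Dict[str, str], vary: str) -> CacheKey:
    """Build a cache key from the URL plus the request headers listed in Vary."""
    request = {name.lower(): value.strip() for name, value in request_headers.items()}
    # Header names are case-insensitive; sort them so the key is stable.
    varied = sorted({name.strip().lower() for name in vary.split(",") if name.strip()})
    return url, tuple((name, request.get(name, "")) for name in varied)


url = "https://www.example.com/app.js"
gzip_client = {"Accept-Encoding": "gzip", "User-Agent": "A"}
brotli_client = {"Accept-Encoding": "br", "User-Agent": "B"}

# With Vary: Accept-Encoding the two clients get separate cache entries.
print(cache_key(url, gzip_client, "Accept-Encoding") != cache_key(url, brotli_client, "Accept-Encoding"))
```

Headers that are not listed in Vary, such as User-Agent in this example, do not affect the key, so responses are shared across clients that differ only in those headers.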

Vary for HTML served from CDNs.

While the main use of Vary is to coordinate Content-Encoding, there are other important variations that websites use to signal cache fragmentation. Using Vary also instructs SEO bots like DuckDuckGo, Google, and BingBot that alternate content would be returned under different conditions. This has been important to avoid SEO penalties for “cloaking” (sending SEO specific content in order to game the rankings).

For HTML pages, the most common use of Vary is to signal that the content will change based on the User-Agent. This is short-hand to indicate that the website will return different content for desktops, phones, tablets, and link-unfurling engines (like Slack, iMessage, and Whatsapp). The use of Vary: User-Agent is also a vestige of the early mobile era, where content was split between “mDot” servers and “regular” servers in the back-end. While the adoption for responsive web has gained wide popularity, this Vary form remains.

In a similar way, Vary: Cookie usually indicates that content will change based on the logged-in state of the user or other personalization.

Vary usage for HTML and resources served from origin and CDN.

Resources, in contrast, don’t use Vary: Cookie as much as the HTML resources. Instead these resources are more likely to adapt based on the Accept, Origin, or Referer. Most media, for example, will use Vary: Accept to indicate that an image could be a JPEG, WebP, JPEG 2000, or JPEG XR depending on the browser’s offered Accept header. In a similar way, third-party shared resources signal that an XHR API will differ depending on which website it is embedded in. This way, a call to an ad server API will return different content depending on the parent website that called the API.

The Vary header also contains evidence of CDN chains. These can be seen in Vary headers such as Accept-Encoding, Accept-Encoding or even Accept-Encoding, Accept-Encoding, Accept-Encoding. Further analysis of these chains and Via header entries might reveal interesting data, for example how many sites are proxying third-party tags.
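Spotting these repeated entries is straightforward to sketch. The hypothetical helper below counts how often each field name appears in a Vary header value. Treating a repeated field as evidence of a proxy chain is an assumption taken from the paragraph above, not something the code can confirm.

```python
from collections import Counter
from typing import Dict


def repeated_vary_fields(vary: str) -> Dict[str, int]:
    """Return the field names that appear more than once in a Vary header value."""
    counts = Counter(name.strip().lower() for name in vary.split(",") if name.strip())
    return {name: count for name, count in counts.items() if count > 1}


print(repeated_vary_fields("Accept-Encoding, Accept-Encoding, Accept-Encoding"))
print(repeated_vary_fields("Accept-Encoding, User-Agent"))
```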

Many uses of Vary are extraneous. With most browsers adopting double-key caching, the use of Vary: Origin is redundant, as is Vary: Range, Vary: Host, or Vary: *. The wild and variable use of Vary is demonstrable proof that the internet is weird.

Surrogate-Control, s-maxage, and Pre-Check

There are other HTTP headers and directives that specifically target CDNs or other proxy caches, such as the Surrogate-Control header and the s-maxage, pre-check, and post-check values in the Cache-Control header. In general, usage of these is low.

Surrogate-Control allows origins to specify caching rules just for CDNs. As CDNs are likely to strip the header before serving responses, its low visible usage isn’t a surprise. In fact, it’s surprising that it appears in any responses at all! (It was even seen from some CDNs that state they strip it.)

Some CDNs support post-check as a method to allow a resource to be refreshed when it goes stale, and pre-check as a maxage equivalent. For most CDNs, usage of pre-check and post-check was below 1%. Yahoo was the exception to this and about 15% of requests had pre-check=0, post-check=0. Unfortunately this seems to be a remnant of an old Internet Explorer pattern rather than active usage. More discussion on this can be found in the Caching chapter.

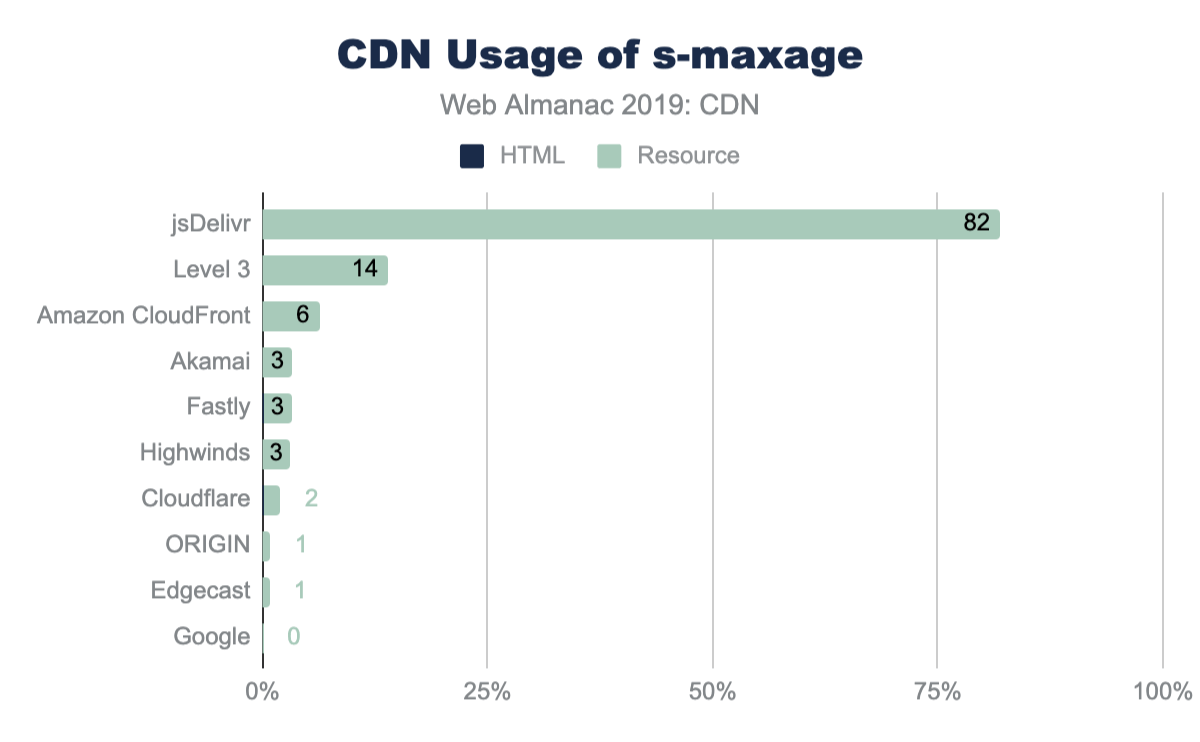

The s-maxage directive informs proxies for how long they may cache a response. Across the Web Almanac dataset, jsDelivr is the only CDN where a high level of usage was seen across multiple resources—this isn’t surprising given jsDelivr’s role as a public CDN for libraries. Usage across other CDNs seems to be driven by individual customers, for example third-party scripts or SaaS providers using that particular CDN.
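How a shared cache might choose a lifetime from these directives can be sketched as follows. This is a simplified illustration, assuming `s-maxage` takes precedence over `max-age` for shared caches such as CDNs. It ignores `no-store`, `private`, the `Expires` header, and quoted values containing commas, and both function names are hypothetical.

```python
from typing import Dict, Optional


def parse_cache_control(value: str) -> Dict[str, Optional[str]]:
    """Split a Cache-Control header value into lower-cased directives."""
    directives: Dict[str, Optional[str]] = {}
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        name, separator, argument = part.partition("=")
        directives[name.strip().lower()] = argument.strip().strip('"') if separator else None
    return directives


def shared_cache_ttl(value: str) -> Optional[int]:
    """Lifetime in seconds for a shared cache: s-maxage first, then max-age."""
    directives = parse_cache_control(value)
    for name in ("s-maxage", "max-age"):
        argument = directives.get(name)
        if argument is not None and argument.isdigit():
            return int(argument)
    return None


# A response that browsers may keep for a minute but a CDN may keep for a day.
print(shared_cache_ttl("public, max-age=60, s-maxage=86400"))
```

Setting a short `max-age` with a long `s-maxage` lets an origin keep content fresh in browsers while still offloading most requests to the CDN.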

s-maxage across CDN responses.

With 40% of sites using a CDN for resources, and presuming these resources are static and cacheable, the usage of s-maxage seems low.

Future research might explore cache lifetimes versus the age of the resources, and the usage of s-maxage versus other validation directives such as stale-while-revalidate.

CDNs for common libraries and content

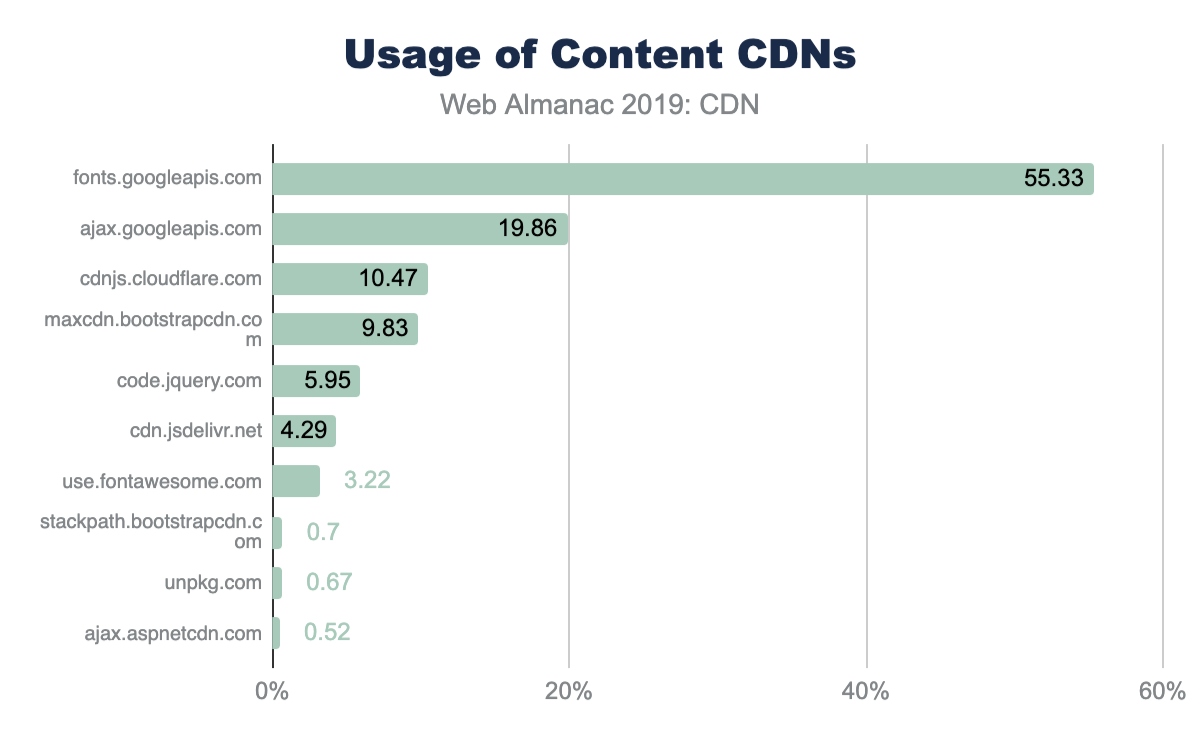

So far, this chapter has explored the use of commercial CDNs, which a site may be using to host its own content, or which may be used by a third-party resource included on the site.

Common libraries like jQuery and Bootstrap are also available from public CDNs hosted by Google, Cloudflare, Microsoft, etc. Using content from one of the public CDNs instead of self-hosting the content is a trade-off. Even though the content is hosted on a CDN, creating a new connection and growing the congestion window may negate the low latency of using a CDN.

Google Fonts is the most popular of the content CDNs and is used by 55% of websites. For non-font content, Google API, Cloudflare’s JS CDN, and Bootstrap’s CDN are the next most popular.

As more browsers implement partitioned caches, the effectiveness of public CDNs for hosting common libraries will decrease and it will be interesting to see whether they are less popular in future iterations of this research.

Conclusion

The reduction in latency that CDNs deliver, along with their ability to store content close to visitors, enables sites to deliver faster experiences while reducing the load on the origin.

Steve Souders’ recommendation to use a CDN remains as valid today as it was 12 years ago, yet only 20% of sites serve their HTML content via a CDN, and only 40% are using a CDN for resources, so there’s plenty of opportunity for their usage to grow further.

There are some aspects of CDN adoption that aren’t included in this analysis. Sometimes this was due to the limitations of the dataset and how it’s collected; in other cases, new research questions emerged during the analysis.

As the web continues to evolve, CDN vendors innovate, and sites use new practices, CDN adoption remains an area rich for further research in future editions of the Web Almanac.