Methodology

Overview

The Web Almanac is a project organized by HTTP Archive. HTTP Archive was started in 2010 by Steve Souders with the mission to track how the web is built. It evaluates the composition of millions of web pages on a monthly basis and makes its terabytes of metadata available for analysis on BigQuery. Learn more about HTTP Archive.

The mission of the Web Almanac is to make the data warehouse of HTTP Archive even more accessible to the web community by having subject matter experts provide contextualized insights. You can think of it as an annual repository of knowledge about the state of the web, 2019 being its first edition.

The 2019 edition of the Web Almanac comprises four pillars: content, experience, publishing, and distribution. Each part of the written report represents a pillar and is made up of chapters exploring its different aspects. For example, Part II represents the user experience and includes the Performance, Security, Accessibility, SEO, PWA, and Mobile Web chapters.

About the dataset

The HTTP Archive dataset is updated with new data every month. For the 2019 edition of the Web Almanac, unless otherwise noted in the chapter, all metrics were sourced from the July 2019 crawl. These results are publicly queryable on BigQuery in tables prefixed with 2019_07_01.

All of the metrics presented in the Web Almanac are publicly reproducible using the dataset on BigQuery. You can browse the queries used by all chapters in our GitHub repository.

For example, to understand the median number of bytes of JavaScript per desktop and mobile page, see 01_01b.sql:

    #standardSQL
    # 01_01b: Distribution of JS bytes by client
    SELECT
      percentile,
      _TABLE_SUFFIX AS client,
      APPROX_QUANTILES(ROUND(bytesJs / 1024, 2), 1000)[OFFSET(percentile * 10)] AS js_kbytes
    FROM
      `httparchive.summary_pages.2019_07_01_*`,
      UNNEST([10, 25, 50, 75, 90]) AS percentile
    GROUP BY
      percentile,
      client
    ORDER BY
      percentile,
      client

Results for each metric are publicly viewable in chapter-specific spreadsheets, for example JavaScript results.
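As a rough illustration of what the 01_01b query computes, the Python sketch below mimics its percentile lookup: it builds 1,000 quantile boundaries over the page weights and reads the boundary at index percentile * 10. The sample byte counts and the helper names are made up for illustration, and the helper is an exact stand-in for BigQuery's APPROX_QUANTILES, which is approximate, so real results may differ slightly.

```python
def quantile_boundaries(values, buckets):
    """Return buckets + 1 boundaries (min, ..., max) using nearest-rank lookup.

    Exact stand-in for BigQuery's APPROX_QUANTILES, which is approximate.
    """
    ordered = sorted(values)
    last = len(ordered) - 1
    return [ordered[round(i * last / buckets)] for i in range(buckets + 1)]


def js_kbytes_percentiles(bytes_js, percentiles=(10, 25, 50, 75, 90)):
    """Map each percentile to a JavaScript weight in KB, mirroring 01_01b.sql."""
    kbytes = [round(b / 1024, 2) for b in bytes_js]
    boundaries = quantile_boundaries(kbytes, 1000)
    # With 1,000 buckets, percentile p sits at index p * 10.
    return {p: boundaries[p * 10] for p in percentiles}


# Hypothetical JavaScript byte counts for 101 pages: 0 KB, 10 KB, ..., 1000 KB.
sample_bytes_js = [i * 10 * 1024 for i in range(101)]
result = js_kbytes_percentiles(sample_bytes_js)
print(result)
```

The real query additionally groups by client, so it produces one such set of percentiles for desktop and one for mobile.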

Websites

There are 5,790,700 websites in the dataset. Among those, 5,297,442 are mobile websites and 4,371,973 are desktop websites. Most websites are included in both the mobile and desktop subsets.
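The overlap between the two subsets can be worked out from the three counts above. The sketch below is a small inclusion-exclusion calculation; it assumes that the total is exactly the union of the mobile and desktop subsets, which the text implies but does not state outright.

```python
# Counts quoted in the text above.
total_websites = 5_790_700
mobile_websites = 5_297_442
desktop_websites = 4_371_973

# Inclusion-exclusion: |mobile AND desktop| = |mobile| + |desktop| - |mobile OR desktop|.
# Assumption: the total is the union of the mobile and desktop subsets.
in_both = mobile_websites + desktop_websites - total_websites
share_in_both = in_both / total_websites

print(f"{in_both:,} websites in both subsets ({share_in_both:.0%} of the total)")
```

Under that assumption, 3,878,715 websites, roughly two thirds of the total, appear in both subsets.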

HTTP Archive sources the URLs for its websites from the Chrome UX Report. The Chrome UX Report is a public dataset from Google that aggregates user experiences across millions of websites actively visited by Chrome users. This gives us a list of websites that are up-to-date and a reflection of real-world web usage. The Chrome UX Report dataset includes a form factor dimension, which we use to get all of the websites accessed by desktop or mobile users.

For its list of websites, the July 2019 HTTP Archive crawl behind the Web Almanac relied on the most recent Chrome UX Report release available at the time, May 2019 (201905). This dataset was released on June 11, 2019 and captures websites visited by Chrome users during the month of May.

Due to resource limitations, HTTP Archive can only test one page from each website in the Chrome UX Report. To reconcile this, only the home pages are included. Be aware that this introduces some bias into the results because a home page is not necessarily representative of the entire website.

HTTP Archive is also considered a lab testing tool, meaning it tests websites from a datacenter and does not collect data from real-world user experiences. As a result, all website home pages are tested with an empty cache in a logged-out state.

Metrics

HTTP Archive collects metrics about how the web is built. It includes basic metrics like the number of bytes per page, whether the page was loaded over HTTPS, and individual request and response headers. The majority of these metrics are provided by WebPageTest, which acts as the test runner for each website.

Other testing tools are used to provide more advanced metrics about the page. For example, Lighthouse is used to run audits against the page to analyze its quality in areas like accessibility and SEO. The Tools section below goes into each of these tools in more detail.

To work around some of the inherent limitations of a lab dataset, the Web Almanac also makes use of the Chrome UX Report for metrics on user experiences, especially in the area of web performance.

Some metrics are completely out of reach. For example, we don’t necessarily have the ability to detect the tools used to build a website. If a website is built using create-react-app, we could tell that it uses the React framework, but not necessarily that a particular build tool is used. Unless these tools leave detectable fingerprints in the website’s code, we’re unable to measure their usage.

Other metrics may not necessarily be impossible to measure but are challenging or unreliable. For example, aspects of web design are inherently visual and may be difficult to quantify, like whether a page has an intrusive modal dialog.

Tools

The Web Almanac is made possible with the help of the following open source tools.

WebPageTest

WebPageTest is a prominent web performance testing tool and the backbone of HTTP Archive. We use a private instance of WebPageTest with private test agents, which are the actual browsers that test each web page. Desktop and mobile websites are tested under different configurations:

| Config | Desktop | Mobile |
|---|---|---|
| Device | Linux VM | Emulated Moto G4 |
| User Agent | Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36 PTST/190704.170731 | Mozilla/5.0 (Linux; Android 6.0.1; Moto G (4) Build/MPJ24.139-64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.146 Mobile Safari/537.36 PTST/190628.140653 |
| Location | Redwood City, California, USA; The Dalles, Oregon, USA | Redwood City, California, USA; The Dalles, Oregon, USA |
| Connection | Cable (5/1 Mbps 28ms RTT) | 3G (1.600/0.768 Mbps 300ms RTT) |
| Viewport | 1024 x 768px | 512 x 360px |

Desktop websites are run from within a desktop Chrome environment on a Linux VM. The network speed is equivalent to a cable connection.

Mobile websites are run from within a mobile Chrome environment on an emulated Moto G4 device with a network speed equivalent to a 3G connection. Note that the emulated mobile User Agent self-identifies as Chrome 65 but is actually Chrome 75 under the hood.
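The mismatch matters for any analysis that trusts the User-Agent header. As a small sketch, the snippet below extracts the Chrome major version that each of the two configured User-Agent strings claims; the `reported_chrome_major` helper is hypothetical and only reads what the string reports, not the browser that is actually running.

```python
import re

# User-Agent strings copied from the configuration table above.
DESKTOP_UA = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) "
    "Chrome/75.0.3770.100 Safari/537.36 PTST/190704.170731"
)
MOBILE_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Moto G (4) Build/MPJ24.139-64) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.146 Mobile "
    "Safari/537.36 PTST/190628.140653"
)


def reported_chrome_major(user_agent):
    """Return the major Chrome version a User-Agent string claims, or None."""
    match = re.search(r"Chrome/(\d+)\.", user_agent)
    return int(match.group(1)) if match else None


desktop_version = reported_chrome_major(DESKTOP_UA)
mobile_version = reported_chrome_major(MOBILE_UA)
print(desktop_version, mobile_version)
```

A server that sniffs the User-Agent would therefore treat the mobile test agent as Chrome 65, even though the pages are rendered by Chrome 75.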

Tests are run from two locations: California and Oregon, USA. HTTP Archive maintains its own test agent hardware located in the Internet Archive datacenter in California. Additional test agents in Google Cloud Platform’s us-west1 location in Oregon are added as needed.

HTTP Archive’s private instance of WebPageTest is kept in sync with the latest public version and augmented with custom metrics. These are snippets of JavaScript that are evaluated on each website at the end of the test. The almanac.js custom metric includes several metrics that were otherwise infeasible to calculate, for example those that depend on DOM state.

The results of each test are made available as a HAR file, a JSON-formatted archive file containing metadata about the web page.
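To give a feel for the format, here is a minimal sketch that reads a heavily trimmed HAR document and totals the bytes transferred for JavaScript responses. The field names follow the HAR 1.2 format, but the URLs and sizes are invented, and the `transferred_js_bytes` helper is only an illustration, not necessarily how HTTP Archive derives its own summary metrics.

```python
import json

# A hand-written, heavily trimmed HAR document. Field names follow the HAR 1.2
# format; the URLs and sizes are made up for illustration.
SAMPLE_HAR = """
{
  "log": {
    "version": "1.2",
    "entries": [
      {"request": {"method": "GET", "url": "https://www.example.com/"},
       "response": {"status": 200, "bodySize": 5120,
                    "content": {"size": 20480, "mimeType": "text/html"}}},
      {"request": {"method": "GET", "url": "https://www.example.com/app.js"},
       "response": {"status": 200, "bodySize": 30720,
                    "content": {"size": 102400, "mimeType": "application/javascript"}}},
      {"request": {"method": "GET", "url": "https://cdn.example.net/lib.js"},
       "response": {"status": 200, "bodySize": 10240,
                    "content": {"size": 40960, "mimeType": "text/javascript"}}}
    ]
  }
}
"""


def transferred_js_bytes(har):
    """Sum the response body sizes of entries served with a JavaScript MIME type."""
    total = 0
    for entry in har["log"]["entries"]:
        response = entry["response"]
        mime_type = response["content"].get("mimeType", "")
        if "javascript" in mime_type:
            # bodySize is -1 when unknown, so clamp it at zero.
            total += max(response.get("bodySize", 0), 0)
    return total


har = json.loads(SAMPLE_HAR)
request_count = len(har["log"]["entries"])
js_bytes = transferred_js_bytes(har)
print(request_count, js_bytes)
```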

Lighthouse

Lighthouse is an automated website quality assurance tool built by Google. It audits web pages to make sure they don’t include user experience antipatterns like unoptimized images and inaccessible content.

HTTP Archive runs the latest version of Lighthouse for all of its mobile web pages; desktop pages are not included because of limited resources. As of the July 2019 crawl, HTTP Archive used version 5.1.0 of Lighthouse.

Lighthouse is run as its own distinct test from within WebPageTest, but it has its own configuration profile:

| Config | Value |
|---|---|
| CPU slowdown | 1x* |
| Download throughput | 1.6 Mbps |
| Upload throughput | 0.768 Mbps |
| RTT | 150 ms |

For more information about Lighthouse and the audits available in HTTP Archive, refer to the Lighthouse developer documentation.

Wappalyzer

Wappalyzer is a tool for detecting technologies used by web pages. There are 65 categories of technologies tested, ranging from JavaScript frameworks to CMS platforms and even cryptocurrency miners. There are over 1,200 supported technologies.

HTTP Archive runs the latest version of Wappalyzer for all web pages. As of July 2019 the Web Almanac used the 5.8.3 version of Wappalyzer.

Wappalyzer powers many chapters that analyze the popularity of developer tools like WordPress, Bootstrap, and jQuery. For example, the Ecommerce and CMS chapters rely heavily on the respective Ecommerce and CMS categories of technologies detected by Wappalyzer.

All detection tools, including Wappalyzer, have their limitations. The validity of their results will always depend on how accurate their detection mechanisms are. Wherever a chapter uses Wappalyzer and there is a specific reason to doubt the accuracy of its analysis, the Web Almanac adds a note saying so.
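The toy detector below sketches the general idea of fingerprint-based detection and why it can miss things. The two regular expressions are hypothetical patterns written for this example; they are not Wappalyzer's actual rules, which are more extensive and look at more than the HTML.

```python
import re

# Hypothetical fingerprints for illustration only; these are NOT Wappalyzer's
# actual detection rules.
FINGERPRINTS = {
    "WordPress": re.compile(r"/wp-(content|includes)/", re.IGNORECASE),
    "jQuery": re.compile(
        r"<script[^>]+src=[\"'][^\"']*jquery[^\"']*\.js", re.IGNORECASE
    ),
}


def detect_technologies(html):
    """Return the sorted names of technologies whose fingerprint appears in the HTML."""
    return sorted(
        name for name, pattern in FINGERPRINTS.items() if pattern.search(html)
    )


page_with_fingerprints = """
<link rel="stylesheet" href="/wp-content/themes/demo/style.css">
<script src="https://code.jquery.com/jquery-3.4.1.min.js"></script>
"""
# A page that ships everything in one renamed bundle gives these patterns
# nothing to match, whatever libraries the bundle contains.
page_without_fingerprints = '<script src="/static/bundle.7f3a9c.js"></script>'

detected = detect_technologies(page_with_fingerprints)
missed = detect_technologies(page_without_fingerprints)
print(detected, missed)
```

The second page produces an empty result even if it uses the same libraries, which is the kind of false negative any fingerprint-based tool has to live with.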

Chrome UX Report

The Chrome UX Report is a public dataset of real-world Chrome user experiences. Experiences are grouped by websites’ origin, for example https://www.example.com. The dataset includes distributions of UX metrics like paint, load, interaction, and layout stability. In addition to grouping by month, experiences may also be sliced by dimensions like country-level geography, form factor (desktop, phone, tablet), and effective connection type (4G, 3G, etc.).
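A distribution in this sense is a histogram: each bin covers a range of values and records the fraction of experiences that fell into it. The sketch below uses an invented first contentful paint histogram for a single origin; the bin boundaries, the densities, and the `start`/`end`/`density` field names are assumptions chosen for the example.

```python
# Made-up first contentful paint histogram for one origin. Each bin covers
# [start, end) milliseconds and holds the fraction of experiences in that range.
fcp_histogram = [
    {"start": 0, "end": 1000, "density": 0.35},
    {"start": 1000, "end": 2500, "density": 0.40},
    {"start": 2500, "end": 4000, "density": 0.15},
    {"start": 4000, "end": 10000, "density": 0.10},
]


def share_faster_than(histogram, threshold_ms):
    """Sum the densities of all bins that end at or before the threshold."""
    return sum(b["density"] for b in histogram if b["end"] <= threshold_ms)


fast_share = share_faster_than(fcp_histogram, 1000)
within_2500_share = share_faster_than(fcp_histogram, 2500)
print(f"{fast_share:.0%} under 1 s, {within_2500_share:.0%} under 2.5 s")
```

Because only binned densities are available, a threshold has to line up with a bin boundary for the share to be exact.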

For Web Almanac metrics that reference real-world user experience data from the Chrome UX Report, the July 2019 dataset (201907) is used.

You can learn more about the dataset in the Using the Chrome UX Report on BigQuery guide on web.dev.

Third Party Web

Third Party Web is a research project by Patrick Hulce, author of the Third Parties chapter, that uses HTTP Archive and Lighthouse data to identify and analyze the impact of third party resources on the web.

Domains are considered to be a third party provider if they appear on at least 50 unique pages. The project also groups providers by their respective services in categories like ads, analytics, and social.
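The 50-page threshold can be sketched as a simple count of distinct pages per requested domain. The example below is a simplified illustration rather than the project's actual code: the sample requests are hypothetical, and comparing exact hostnames to decide what counts as third party is an assumption made to keep the sketch short.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def third_party_domains(requests, min_pages=50):
    """Return domains requested by at least min_pages distinct pages.

    requests is an iterable of (page_url, request_url) pairs. Requests to the
    page's own hostname are skipped.
    """
    pages_by_domain = defaultdict(set)
    for page_url, request_url in requests:
        page_host = urlsplit(page_url).hostname
        request_host = urlsplit(request_url).hostname
        if request_host and request_host != page_host:
            pages_by_domain[request_host].add(page_url)
    return {d for d, pages in pages_by_domain.items() if len(pages) >= min_pages}


# Hypothetical crawl: 60 pages load an analytics script, only 3 load a widget.
sample_requests = []
for i in range(60):
    page = f"https://site{i}.example/"
    sample_requests.append((page, f"https://site{i}.example/main.css"))
    sample_requests.append((page, "https://analytics.example.net/a.js"))
    if i < 3:
        sample_requests.append((page, "https://widget.example.org/w.js"))

providers = third_party_domains(sample_requests)
print(providers)
```

Only the analytics domain clears the threshold; the widget domain appears on too few pages to be treated as a provider.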

Several chapters in the Web Almanac use the domains and categories from this dataset to understand the impact of third parties.

Rework CSS

Rework CSS is a JavaScript-based CSS parser. It takes entire stylesheets and produces a JSON-encoded object distinguishing each individual style rule, selector, directive, and value.

This special purpose tool significantly improved the accuracy of many of the metrics in the CSS chapter. CSS in all external stylesheets and inline style blocks for each page were parsed and queried to make the analysis possible. See this thread for more information about how it was integrated with the HTTP Archive dataset on BigQuery.
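Once a stylesheet is available as structured data, questions like "which properties are declared most often?" become simple traversals. The sketch below walks a small hand-written object in the general shape such a parser produces; the exact field names and the sample rules are assumptions for illustration, and the real analysis was done with queries on BigQuery rather than in Python.

```python
import json
from collections import Counter

# A trimmed object in the general shape a parser like Rework CSS produces for
#   a { color: red; margin: 0 }  .btn, button { color: blue }
# The exact field names here are an assumption for illustration.
PARSED_CSS = json.loads("""
{
  "type": "stylesheet",
  "stylesheet": {
    "rules": [
      {"type": "rule", "selectors": ["a"],
       "declarations": [
         {"type": "declaration", "property": "color", "value": "red"},
         {"type": "declaration", "property": "margin", "value": "0"}]},
      {"type": "rule", "selectors": [".btn", "button"],
       "declarations": [
         {"type": "declaration", "property": "color", "value": "blue"}]}
    ]
  }
}
""")


def property_counts(parsed):
    """Count how often each CSS property is declared across all style rules."""
    counts = Counter()
    for rule in parsed["stylesheet"]["rules"]:
        if rule.get("type") != "rule":
            continue  # skip comments, at-rules, and other node types
        for declaration in rule.get("declarations", []):
            if declaration.get("type") == "declaration":
                counts[declaration["property"]] += 1
    return counts


counts = property_counts(PARSED_CSS)
selector_count = sum(
    len(rule.get("selectors", [])) for rule in PARSED_CSS["stylesheet"]["rules"]
)
print(counts.most_common(), selector_count)
```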

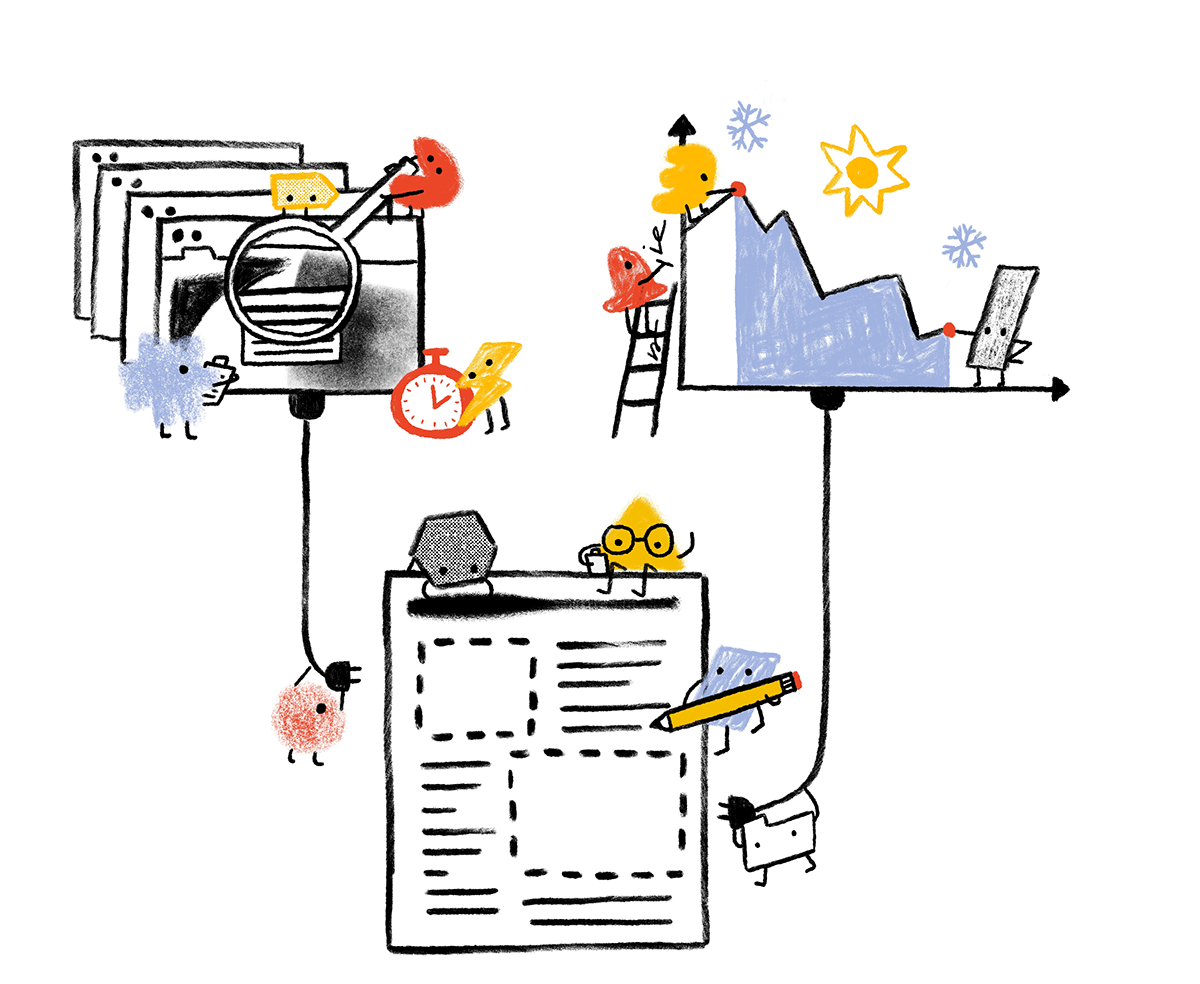

Analytical process

The Web Almanac took about a year to plan and execute with the coordination of dozens of contributors from the web community. This section describes why we chose the metrics you see in the Web Almanac and how they were queried and interpreted.

Brainstorming

The Web Almanac began in January 2019 as a post on the HTTP Archive forum describing the initiative and gathering support. In March 2019 we created a public brainstorming doc in which anyone in the web community could write in ideas for chapters or metrics. This was a critical step to ensure we were focusing on things that matter to the community and had a diverse set of voices included in the process.

As a result of the brainstorming, 20 chapters were solidified and we began assigning subject matter experts and peer reviewers to each chapter. This process had some inherent bias because of the challenge of getting volunteers to commit to a project of this scale. Thus, many of the contributors are members of the same professional circles. One explicit goal for future editions of the Web Almanac is to encourage even more inclusion of underrepresented and heterogeneous voices as authors and peer reviewers.

We spent May through June 2019 pairing people with chapters and getting their input to finalize the individual metrics that would make up each chapter.

Analysis

In June 2019, with the stable list of metrics and chapters, data analysts triaged the metrics for feasibility. In some cases, custom metrics needed to be created to fill gaps in our analytic capabilities.

Throughout July 2019, the HTTP Archive data pipeline crawled several million websites, gathering the metadata to be used in the Web Almanac.

Starting in August 2019, the data analysts began writing queries to extract the results for each metric. In total, 431 queries were written by hand! You can browse all of the queries by chapter in the sql/2019 directory of the project’s GitHub repository.

Interpretation

Authors worked with analysts to correctly interpret the results and draw appropriate conclusions. As authors wrote their respective chapters, they drew from these statistics to support their framing of the state of the web. Peer reviewers worked with authors to ensure the technical correctness of their analysis.

To make the results more easily understandable to readers, web developers and analysts created data visualizations to embed in the chapter. Some visualizations are simplified to make the conclusions easier to grasp. For example, rather than showing a full histogram of a distribution, only a handful of percentiles are shown. Unless otherwise noted, all distributions are summarized using percentiles, especially medians (50th percentile), and not averages.
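The preference for medians over averages is easy to motivate with a skewed sample. In the sketch below, the page weights are hypothetical: nine modest pages and one extreme outlier, a shape that is common for web data.

```python
from statistics import mean, median

# Hypothetical page weights in KB: most pages are modest, one is enormous.
page_weights_kb = [300, 350, 400, 450, 500, 550, 600, 650, 700, 50_000]

average_kb = mean(page_weights_kb)
median_kb = median(page_weights_kb)

# The single outlier drags the average far above what a typical page weighs,
# while the median stays in the middle of the pack.
print(f"average: {average_kb:,.0f} KB, median: {median_kb:,.0f} KB")
```

Here the average is more than ten times the median, even though nine of the ten pages weigh 700 KB or less.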

Finally, editors revised the chapters to fix simple grammatical errors and ensure consistency across the reading experience.

Looking ahead

The 2019 edition of the Web Almanac is the first of what we hope to be an annual tradition in the web community of introspection and a commitment to positive change. Getting to this point has been a monumental effort thanks to many dedicated contributors and we hope to leverage as much of this work as possible to make future editions even more streamlined.

If you’re interested in contributing to the 2020 edition of the Web Almanac, please fill out our interest form. We’d love to hear your ideas for making this project even better!